Increase IOPS: Efficient IOPS Provisioning on AWS

CEO and Co-founder

Cloud storage is a highly complex discipline, with various options and pricing plans to fit almost any need. The complexity is so great that it is easy for an organization to pay 10-20 times the cost of the service it actually needs, simply because it picked a suboptimal storage plan.

Here, we examine a specific type of service – Elastic Block Store (EBS). EBS volumes are storage devices that can be attached to Elastic Compute Cloud (EC2) instances. There are several options when selecting an EBS device, all of which vary by performance and price. Clients that are interested in high performance will generally consider the Solid-State Drive (SSD) that delivers the most read/write operations. This characteristic is measured by IOPS – the number of input and output operations per second – but is also affected by bandwidth and throughput. Furthermore, EC2 instances have their own limits on IOPS, bandwidth and throughput, making the choice also dependent on the type of machine selected.

In this study, we will focus on IOPS and show how to fully take advantage of AWS services to minimize our costs as much as possible.

EBS Volume Types

The SSD EBS volumes come in two varieties: general purpose SSDs (gp2 and gp3) and provisioned IOPS SSDs (io1 and io2). The gpX variety is marketed by AWS for use cases like low-latency apps and development/test environments, while ioX is marketed for workloads that require sustained, I/O-intensive performance, such as databases.

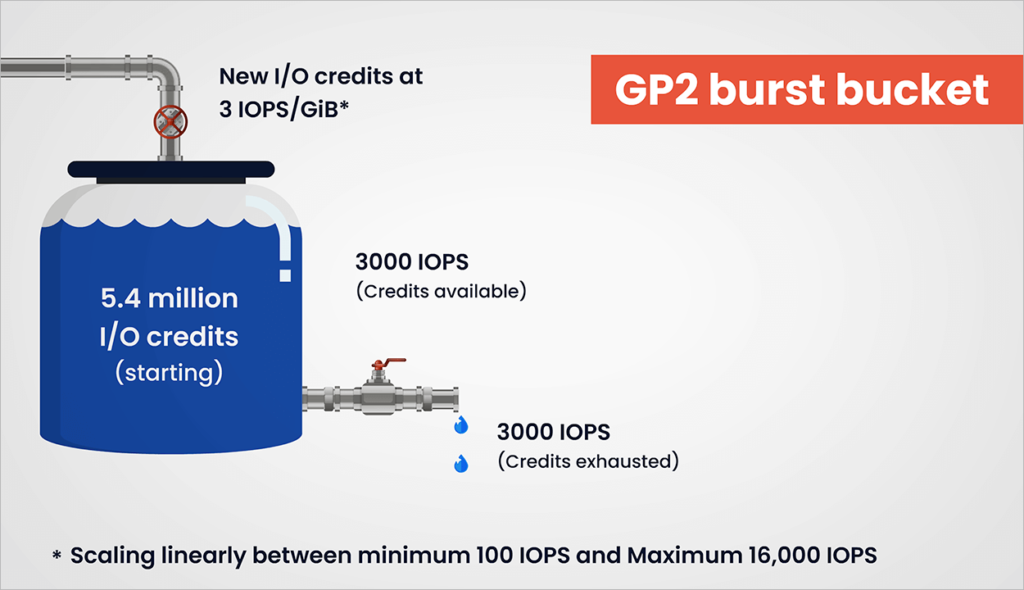

The gp2 devices are priced exclusively based on capacity ($0.10 per GiB-month in most cases) and deliver IOPS and throughput based on the capacity provisioned, with the ability to burst up to 3000 IOPS. The best way to understand how this works is through an I/O credit reservoir (or burst bucket). Each gp2 device comes with a reservoir of 5.4 million I/O credits. Each I/O performed by the device consumes one credit, and the maximum number of credits that can be consumed per second is three times the device capacity (in GiB) or 3000, whichever is greater. At the same time, every second, three times the capacity (in GiB) is replenished into the reservoir, up to the 5.4 million credit cap.

This means that a 500 GiB gp2 device that sustains the maximum IOPS will deliver 3000 IOPS for one hour, deplete all of its credits (the net drain of 3000 IOPS consumed minus 1500 IOPS replenished empties the 5.4 million credits in 3600 seconds), and then deliver 1500 IOPS thereafter (simply the rate of replenishment). However, a 2000 GiB device will sustain 6000 IOPS because its replenishment rate is higher than the 3000 IOPS burst level.
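The arithmetic above can be reproduced with a few lines of Python. This is a minimal sketch of the burst bucket as described in this article, not an AWS API; the function and constant names are our own, and the model ignores the floor and ceiling that AWS applies to very small and very large volumes.

```python
# Simplified gp2 burst-bucket model, using the figures quoted in the article.
BUCKET_CREDITS = 5_400_000  # initial (and maximum) I/O credits per gp2 device
BURST_IOPS = 3000           # burst ceiling
IOPS_PER_GIB = 3            # credits replenished per second, per GiB


def baseline_iops(size_gib):
    """Replenishment rate, which is also the sustained IOPS once credits run out."""
    return IOPS_PER_GIB * size_gib


def burst_seconds(size_gib):
    """Seconds a full bucket lasts at maximum burst.

    Returns infinity when the baseline already meets or exceeds the burst
    level, because the bucket never drains in that case.
    """
    base = baseline_iops(size_gib)
    if base >= BURST_IOPS:
        return float("inf")
    return BUCKET_CREDITS / (BURST_IOPS - base)


for size in (500, 2000):
    print(size, "GiB:", baseline_iops(size), "baseline IOPS,",
          burst_seconds(size), "seconds of burst")
```

For the 500 GiB device the bucket drains at a net 1500 credits per second, which gives the one-hour burst window; the 2000 GiB device never drains its bucket.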

The gp3 devices, in contrast, are priced based on capacity and sustained IOPS/throughput. Capacity costs $0.08 per GiB-month; IOPS costs $0.005 per provisioned IOPS-month over 3000; throughput costs $0.04 per MiBps-month over 125 MiBps. This means that each gp3 device comes with 3000 sustained IOPS and 125 MiBps throughput, but additional IOPS and throughput can be provisioned at an additional cost.

When comparing gp2 with gp3, it seems that gp3 is clearly superior to gp2. For example, a 500 GiB gp2 device that delivers 3000 burst IOPS (1500 base IOPS) and 250 MiBps throughput will cost $50 per month. The same $50 will give you a 500 GiB gp3 device that delivers 4015 sustained IOPS and 250 MiBps throughput – much more IOPS. This is the case regardless of the gp2 capacity provisioned, meaning that an equally priced gp3 device will deliver more IOPS per dollar.

Finally, there are the io1 and io2 devices. Prior to gp3, the ioX devices were the only products that offered custom IOPS provisioning. Still today, they are the only products that offer up to 64,000 IOPS, whereas the gpX devices are cut off at 16,000 IOPS. Furthermore, they offer very low latency when connected to EBS-optimized EC2 instances, and in the case of io2, the durability rating is 99.999% per year, compared to 99.9% per year that is delivered by the other EBS devices. However, ioX devices carry a hefty price, even when the provisioned IOPS is comparable to that of gp3 devices. For example, a 500 GiB io1 or io2 device with 3000 provisioned IOPS will cost $257.50 per month, and with 16,000 provisioned IOPS will cost $1,102.50 per month. Compare this with the cost of a gp3 device at $74.82 and $139.82 per month, respectively (both provisioned with 1000 MiBps of throughput).

Bursting and Multi-Device Advantage

The ability to burst IOPS presents an interesting opportunity to optimize filesystems for many use cases. Moreover, filesystem partitions that span multiple disks further add to the variety of use cases. We will focus our study on Linux and the BTRFS filesystem.

BTRFS is a copy-on-write filesystem that allows us to resize filesystems and add/remove disks, all without interrupting the regular operations of the operating system. When we serialize multiple block storage devices into a single BTRFS filesystem, we gain the ability to write to multiple devices near-simultaneously and tap into larger aggregate IOPS levels.

For example, suppose we have two 50 GiB gp2 devices with 150 baseline IOPS each. By serializing the two devices, we effectively get a 100 GiB gp2 device with 300 baseline IOPS. But that is not all. Each device has its own burst bucket, so we end up with double the credits and twice the maximum credit reserves compared to a single device. That means we can get 6000 burst IOPS for a duration of 1895 seconds when using two 50 GiB devices, compared to 3000 burst IOPS for a duration of 2000 seconds when using a single 100 GiB device. That is nearly twice the I/O.

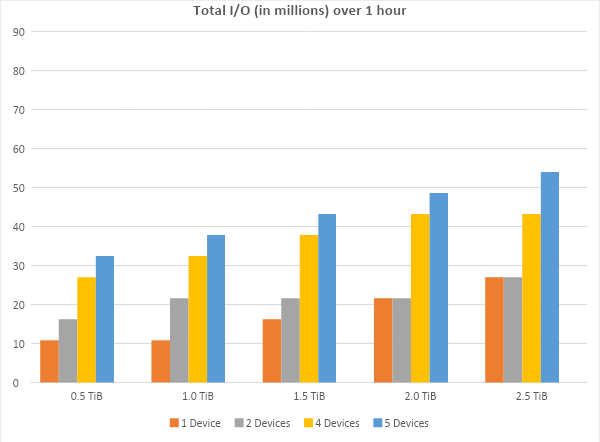

For use cases where we need lots of IOPS only during a few periods in the day, this is a terrific value proposition. For example, using one 500 GiB device, we can burst about 11 million I/O over a one-hour period, but we get about 16 million I/O if we use two 250 GiB devices, and 27 million I/O if we use four 125 GiB devices over the same one-hour period. We expect similar performance boosts for larger filesystems as well, but with diminishing returns, because devices of 1,000 GiB or more receive a baseline IOPS that already matches or exceeds the burst level.
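The one-hour totals quoted above follow directly from the burst bucket model. The sketch below is our own illustration, with hypothetical function names; it assumes every device starts with a full bucket, that the workload is spread evenly across devices, and that the instance itself imposes no limit.

```python
# Total I/O deliverable by N equally sized gp2 devices over a time window,
# using the simplified burst-bucket figures quoted in the article.
BUCKET_CREDITS = 5_400_000  # I/O credits in a full bucket
BURST_IOPS = 3000           # burst ceiling per device
IOPS_PER_GIB = 3            # baseline IOPS per GiB


def io_in_window(size_gib, seconds):
    """I/O a single device can deliver in `seconds`, starting with a full bucket."""
    base = IOPS_PER_GIB * size_gib
    if base >= BURST_IOPS:
        return base * seconds  # never limited by the bucket
    # Burst until the bucket is empty (or the window ends), then fall to baseline.
    burst_time = min(seconds, BUCKET_CREDITS / (BURST_IOPS - base))
    return BURST_IOPS * burst_time + base * (seconds - burst_time)


def striped_io(total_gib, devices, seconds=3600):
    """Aggregate I/O when `total_gib` is split evenly across `devices` volumes."""
    return devices * io_in_window(total_gib / devices, seconds)


for n in (1, 2, 4):
    print(f"{n} x {500 // n} GiB: {striped_io(500, n) / 1e6:.1f} million I/O per hour")
```

Under these assumptions the three layouts come out at roughly 10.8, 16.2 and 27.0 million I/O, which matches the rounded figures in the text.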

Suppose you have a data store with 400 GB of pictures that you now need to run through an ML algorithm. You can’t load 400 GB of data into RAM, but you can use multiple serialized storage devices to remove storage bottlenecks, and you will enjoy the much lower cost of gp2 devices compared to something like an io1 device. This is just one of many situations that can benefit from burst optimization.

IOPS Testing

In this section we test our burst advantage hypothesis. We use AWS c4.2xlarge EC2 instances running Amazon Linux 2 on the 64-bit x86 architecture (a fork of CentOS), and benchmark the following device configurations:

- 1x500GiB gp2 @ 50 $/month

- 5x100GiB gp2 @ 50 $/month

- 1x500GiB gp3 – 4015/250 @ 50 $/month

- 5x100GiB gp3 – 3400/125 @ 50 $/month

- 1x500GiB io1 – 5000 @ 387.50 $/month

- 1x500GiB io1 – 18000 @ 1232.50 $/month
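The monthly prices in this list can be checked with a short calculation. This is a sketch under stated assumptions: the gp3 IOPS rate is taken as $0.005 per IOPS-month above 3000 (the value that reproduces the $50 configurations), and the io1 rates of $0.125 per GiB-month and $0.065 per provisioned IOPS-month are our assumption, chosen because they reproduce the io1 totals listed here. Actual prices vary by region and over time.

```python
# Monthly list-price estimates for the benchmarked configurations.
GP2_PER_GIB = 0.10
GP3_PER_GIB = 0.08
GP3_PER_IOPS = 0.005    # per provisioned IOPS above the 3000 included
GP3_PER_MIBPS = 0.04    # per MiBps above the 125 included
IO1_PER_GIB = 0.125     # assumption, not quoted in the article
IO1_PER_IOPS = 0.065    # assumption, not quoted in the article


def gp2_cost(gib):
    return gib * GP2_PER_GIB


def gp3_cost(gib, iops=3000, mibps=125):
    extra_iops = max(0, iops - 3000)
    extra_mibps = max(0, mibps - 125)
    return gib * GP3_PER_GIB + extra_iops * GP3_PER_IOPS + extra_mibps * GP3_PER_MIBPS


def io1_cost(gib, iops):
    return gib * IO1_PER_GIB + iops * IO1_PER_IOPS


monthly_costs = {
    "1x500GiB gp2": gp2_cost(500),
    "5x100GiB gp2": 5 * gp2_cost(100),
    "1x500GiB gp3 4015/250": gp3_cost(500, 4015, 250),
    "5x100GiB gp3 3400/125": 5 * gp3_cost(100, 3400, 125),
    "1x500GiB io1 5000": io1_cost(500, 5000),
    "1x500GiB io1 18000": io1_cost(500, 18000),
}

for name, cost in monthly_costs.items():
    print(f"{name}: ${cost:.2f}/month")
```

With these rates the single gp3 device comes out a few cents above $50, and every other configuration lands on the listed price.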

The c4.2xlarge instances are EBS-optimized virtual machines that will help us push these devices to their limits, and we see them as a representative choice for most AWS customers.

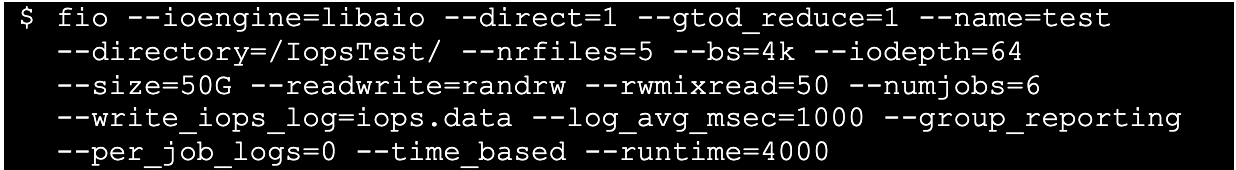

We benchmark IOPS using the Flexible I/O tester (fio).

The test reads and writes 30 files, each 10 GB, in 6 parallel threads to our BTRFS filesystem mounted on ‘/IopsTest/’. It mixes the I/O so that 50% are reads and 50% are writes. Finally, the test runs for 4000 seconds (restarting as needed) and samples read and write IOPS every second.
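The exact fio parameters are not reproduced in this text. As a rough sketch only, the Python snippet below assembles a fio command line that matches the description; it is not the command used for the benchmark. The job name, the random access pattern, the 4k block size, the libaio engine and direct I/O are all our assumptions, as is the split of 30 files into 5 files per job across 6 jobs.

```python
import shlex


def build_fio_command(directory="/IopsTest/", jobs=6, files_per_job=5,
                      size_per_job="50G", runtime_s=4000):
    """Build a fio argument list approximating the test described in the article."""
    return [
        "fio",
        "--name=iops-test",           # hypothetical job name
        f"--directory={directory}",   # mount point of the BTRFS filesystem
        "--rw=randrw",                # assumption: random mixed reads and writes
        "--rwmixread=50",             # 50% reads, 50% writes
        "--bs=4k",                    # assumption: block size is not stated
        "--ioengine=libaio",          # assumption
        "--direct=1",                 # assumption: bypass the page cache
        f"--numjobs={jobs}",          # 6 parallel workers
        f"--nrfiles={files_per_job}", # 5 files per job -> 30 files in total
        f"--size={size_per_job}",     # 50G per job / 5 files = 10G per file
        f"--runtime={runtime_s}",
        "--time_based",               # keep looping until the runtime elapses
        "--write_iops_log=iops-test", # per-job IOPS samples for reads and writes
        "--log_avg_msec=1000",        # average samples over one-second windows
        "--group_reporting",
    ]


print(shlex.join(build_fio_command()))
```

The printed line can be pasted into a shell on a machine with fio installed; the sizes should be scaled down for a quick trial run.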

We designed the test to burst as many IOPS as possible, and running it for 4000 seconds gets us past the 3600 seconds of burst that we get with a new 500 GiB gp2 device.

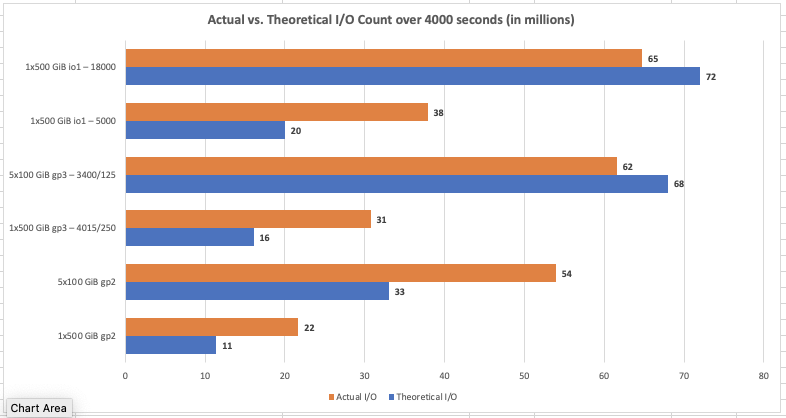

Besides sampled IOPS data, we also received total I/O read/write and average IOPS for the duration of the test. The results, by device group, were as follows:

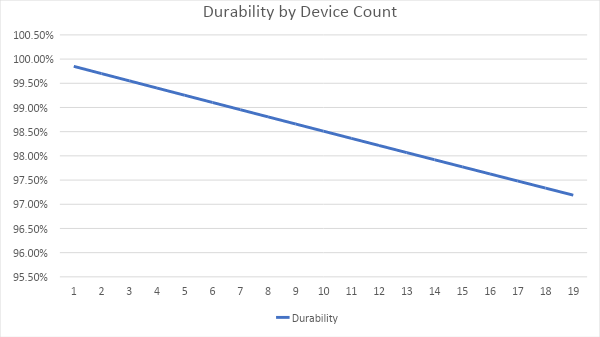

We see that gp3 devices provide superior results to gp2 devices, and comparable results to the io1 device (on a per provisioned IOPS basis). In other words, you can get the same level of IOPS performance out of a single gp3 device for a lot less than a single io1 device of similar provisioning. We also see that when utilizing multiple devices, we can outperform the io1 device provisioned with 5000 IOPS, but for a lot less money. We do, however, pay for that in durability. As we serialize more devices into a single filesystem, our durability drops (99.85% at 1 device and 99.25% at 5 devices).
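The durability figures can be reproduced with a simple compounding calculation. This is a sketch under our own assumptions: device failures are independent, each device has an annual durability of 99.85%, and losing any one device loses the whole filesystem (no redundancy).

```python
def combined_durability(per_device, devices):
    """Annual durability of a filesystem that needs every device to survive."""
    return per_device ** devices


for n in (1, 5):
    print(f"{n} device(s): {combined_durability(0.9985, n):.2%}")
```

Each additional device multiplies in another chance of loss, which is why the five-device layout ends up about 0.6 percentage points below the single device.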

Of course, we would like to have the highest durability that we can get. But the question is, at what cost? Are we willing to pay 600% more for an additional 0.6% durability (comparing one io1 to five gp3 devices)? Now we have the numbers to make more informed decisions.

Finally, we see that all configurations capped out at around 65 million operations over the test period. This is more likely a result of the instance’s burst limitation than of the device. In other words, unless you are willing to pay even more for dedicated Nitro machines, or you are using the multi-attach feature of EBS, you are unlikely to see the sustained performance promised by these higher-priced units, and paying for those options raises your cost even further.

Below we take a closer look at how IOPS were utilized during the lifecycle of the test by each configuration.

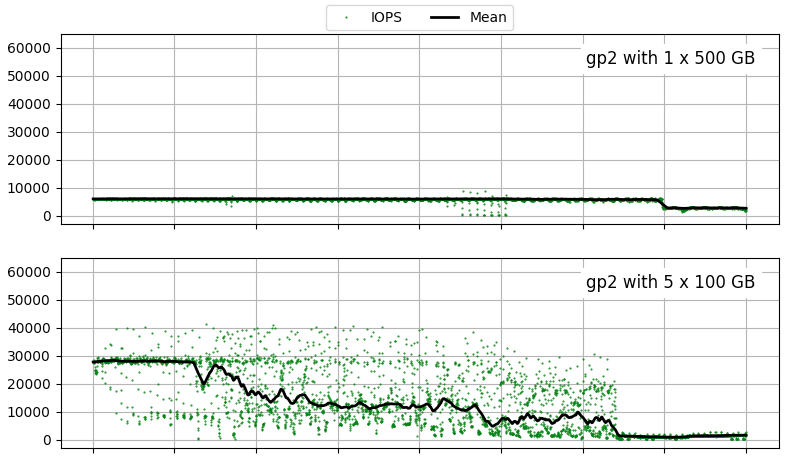

GP2

From top to bottom: 1x500GiB gp2 and 5x100GiB gp2

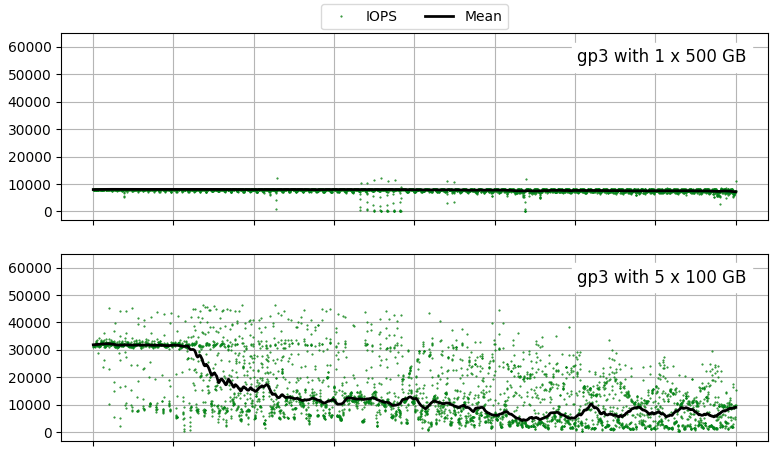

GP3

From top to bottom: 1x500GiB gp3 and 5x100GiB gp3

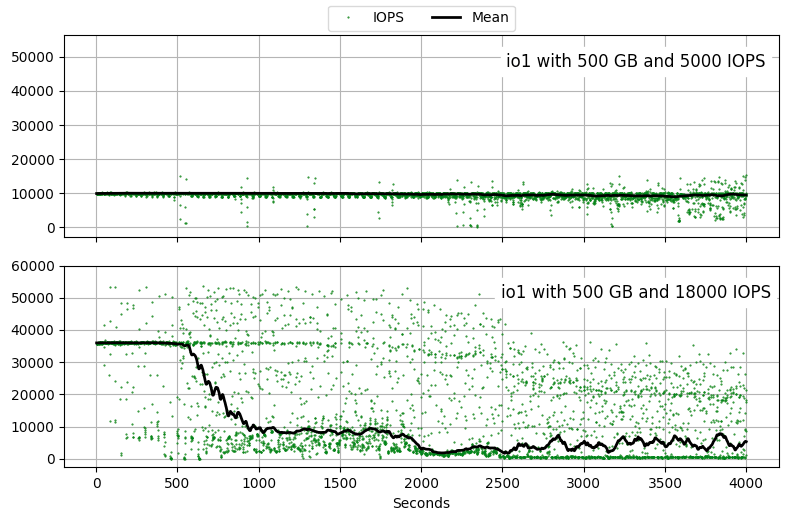

IO1

As expected, we see that serialized configurations delivered exceptional IOPS performance for a burst duration compared to single EBS configurations. Furthermore, single device configurations sustained a steady IOPS for longer, with gp2 exhausting burst credits at the 1-hour mark as expected.

However, regardless of the configuration, we see that performance dropped significantly, even for the io1 configuration provisioned with 18000 IOPS. This is consistent with our hypothesis that we exhausted our EC2 instance’s burst credit pool.

Finally, we see that on single EBS configurations, we are achieving twice the provisioned IOPS. That is possible if read and write operations each used a separate credit pool, or if there is a bug in the fio command. The latter case simply means that we can’t trust the absolute values of the fio test, but we can still trust the relative performance results.

The IOPS Advantage with Zesty

In summary, we have shown that:

- It is possible to achieve significantly more IOPS provisioning by utilizing multiple EBS devices in serial configuration.

- The cost of serializing devices is durability or money. Since we have the numbers for the configurations in this article and many others as well, we can do a cost benefit analysis that has not been seen before in the cloud industry.

- Provisioning more IOPS with an ioX device is only really necessary when you need prolonged sustained performance or very low latency, in which case you will also need to provision the higher-priced Nitro instances.

Zesty Disk currently automates storage provisioning so that our clients don’t have to pay for more storage than they actually need. In future releases of Zesty Disk, we plan to include dynamic IOPS provisioning so that clients do not have to pay for more IOPS than they need. The tests performed in this article are the kinds of tests we perform to get a deeper understanding of the physical limitations of the architecture so that we can squeeze every last bit of performance for our clients.

Interested in learning more about Zesty Disk? Contact one of our cloud experts now!
