The Hidden Costs of AWS Storage Services: Explained

Tech Evangelist

This article was originally published on Spiceworks.

Cloud computing is an innovative technology, but working in the cloud can sometimes cost more than we expect. Despite the growing number of cloud service providers, engineering teams see unpredictable prices for the same services they use every month, contributing to an estimated 35% of cloud spend being wasted.

Enterprise organizations in particular are feeling this pain. Pinterest's AWS bill was 41% higher in 2018 than in 2017 because it had to buy extra capacity at a higher price, even though it had already paid for capacity in advance. A 2021 report by Virtana found that 82% of 350 companies based in the US and UK experience “unnecessary” cloud costs. The same report cited a Gartner prediction that “60 percent of infrastructure and operations leaders will encounter cloud costs overruns by 2024.”

There are multiple cases, reported or not, where unnecessary cloud spend burns holes in a company’s technical purse.

This article will help you identify the culprits responsible for cloud waste on AWS and teach you how to nip them in the bud. We’ll also discuss how S3 pricing is calculated and some general cost optimization tenets.

Brief Overview of AWS Storage Services

AWS uses a pay-as-you-go model: customers pay only for the individual resources and services they consume, for as long as they consume them.

The price of using AWS depends on three factors: compute, storage, and outbound data transfer. This article focuses on storage services with hidden costs, which we’ll call our primary “culprits.” AWS storage services include:

- Amazon Simple Storage Service (S3)

- Amazon Elastic Block Store (EBS)

- Amazon Elastic File System (EFS)

- Amazon FSx for Lustre

- Amazon S3 Glacier

- Amazon FSx for Windows File Server

- AWS Storage Gateway

Because S3 and EBS are the most commonly used, we focus on them when discussing the culprits below. We go deepest into Amazon S3, which has become many developers’ default storage service thanks to its low cost and high durability.

Amazon S3 Pricing

It’s essential to know that with S3 you pay only for the storage you actually use, not for provisioned capacity. Other services, such as EBS, bill you for the capacity you provision, whether or not you use it.

Typically, there are six cost components for the S3 service:

- Storage: You pay for storing objects in S3 buckets.

- Requests and data retrievals: You pay according to the type and number of requests made against your S3 buckets; DELETE and CANCEL requests are free.

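- Data transfer: You pay for bandwidth into and out of S3, with some exceptions, such as data transferred in from the internet, data transferred out to an EC2 instance in the same Region, and data transferred out to Amazon CloudFront.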

- Management and analytics: You pay for the storage management and analytics features you enable, such as S3 Inventory and S3 Storage Class Analysis.

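- Replication: You pay for the storage, requests, and data transfer that replication generates; the cost depends on whether you use Same-Region Replication (SRR) or Cross-Region Replication (CRR).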

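- S3 Object Lambda for processing data: S3 Object Lambda lets an S3 GET request invoke an AWS Lambda function you define, which processes the data and returns the transformed object to your application. You pay for the Lambda function’s compute in addition to the S3 Object Lambda charges.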

To learn more about these, you can visit the Amazon S3 pricing page.

How S3 Costs Are Calculated

AWS S3 service costs can be grouped into three major categories:

- Storage: S3 Standard is billed per GB-month (metered hourly) at $0.023 per GB-month for the first 50 TB; the per-GB rate decreases as you store more.

- Requests: API calls are billed per 1,000 requests: $0.0004 for reads (GET) and $0.005 for writes (PUT, COPY, POST, LIST), so writes cost 12.5x more than reads.

- Data transfer: Transferring data out of an AWS Region costs $0.02 per GB to another AWS Region and $0.09 per GB to the internet for the first 10 TB each month; the per-GB rate decreases as you transfer more data.

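These rates are for the US East (N. Virginia) Region at the time of writing. Prices vary by Region and change over time, so check the Amazon S3 pricing page for current figures.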
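To see how the three cost categories add up, here is a minimal Python sketch of a monthly S3 Standard bill. It assumes the US East rates listed above and, for simplicity, applies only the first pricing tier (no volume discounts, no free tier); the function and parameter names are illustrative and not part of any AWS SDK.

```python
# Assumed S3 Standard list prices (US East, first pricing tier only).
STORAGE_PER_GB_MONTH = 0.023
GET_PER_1000 = 0.0004
PUT_PER_1000 = 0.005
INTER_REGION_PER_GB = 0.02
INTERNET_EGRESS_PER_GB = 0.09


def estimate_s3_monthly_cost(
    stored_gb: float,
    get_requests: int,
    put_requests: int,
    inter_region_gb: float = 0.0,
    internet_egress_gb: float = 0.0,
) -> dict[str, float]:
    """Rough monthly cost breakdown; ignores volume discounts and free tiers."""
    costs = {
        "storage": stored_gb * STORAGE_PER_GB_MONTH,
        "requests": get_requests / 1000 * GET_PER_1000
        + put_requests / 1000 * PUT_PER_1000,
        "transfer": inter_region_gb * INTER_REGION_PER_GB
        + internet_egress_gb * INTERNET_EGRESS_PER_GB,
    }
    costs["total"] = sum(costs.values())
    return costs


bill = estimate_s3_monthly_cost(
    stored_gb=1000,
    get_requests=5_000_000,
    put_requests=1_000_000,
    internet_egress_gb=500,
)
for item, usd in bill.items():
    print(f"{item:>8}: ${usd:,.2f}")
```

In this made-up example, serving 500 GB to the internet ($45) costs roughly twice as much as storing 1 TB ($23), which is why data transfer is often the less visible part of an S3 bill.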

S3 Culprits and How to Optimize Them

Several hidden culprits can inflate S3 spend. Here is how to spot and optimize each one.

Compute and Storage Location

Running an Amazon EC2 instance in a different AWS Region from the S3 bucket it uses adds latency and incurs inter-Region data transfer charges. When the EC2 instance and the S3 bucket are in the same Region, data transfer between them is free.

If your workloads regularly read data from another Region, replicating the S3 bucket to that Region might be the most cost-effective option. Keep in mind that replication can become expensive if there are many objects, very large objects, or many replicas. Replication is also asynchronous, so replicas can lag behind the source bucket and temporarily serve stale data. If the bucket serves a high volume of downloads, deliver cacheable files through Amazon CloudFront, which in most cases is cheaper and faster than serving them directly from S3.
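One back-of-the-envelope way to weigh replication against repeated cross-Region reads: without a replica, every remote read pays inter-Region transfer; with a replica, you pay to store a second copy and pay transfer once for each newly written byte. The sketch below assumes the $0.02/GB inter-Region and $0.023/GB-month S3 Standard rates quoted earlier and ignores replication request fees and the initial copy of existing objects; the function name is hypothetical.

```python
INTER_REGION_PER_GB = 0.02    # assumed inter-Region transfer rate
STORAGE_PER_GB_MONTH = 0.023  # assumed S3 Standard storage rate


def replication_is_cheaper(
    dataset_gb: float,
    new_data_gb_per_month: float,
    cross_region_reads_gb_per_month: float,
) -> bool:
    """Compare one month of remote reads against one month of keeping a replica."""
    # Without replication: every cross-Region read pays inter-Region transfer.
    remote_read_cost = cross_region_reads_gb_per_month * INTER_REGION_PER_GB
    # With replication: store a full copy and pay transfer once for new writes.
    replica_cost = (
        dataset_gb * STORAGE_PER_GB_MONTH
        + new_data_gb_per_month * INTER_REGION_PER_GB
    )
    return replica_cost < remote_read_cost


# A 100 GB dataset read 5 TB/month from another Region: replicate.
print(replication_is_cheaper(100, 10, 5000))
# The same dataset read only 50 GB/month remotely: keep reading cross-Region.
print(replication_is_cheaper(100, 10, 50))
```

The comparison tips toward replication when data is read many times per byte written, and away from it when the dataset is large but rarely read remotely.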

Not Monitoring

Set up Amazon CloudWatch alarms to monitor usage metrics. You can also use AWS Cost Explorer or download AWS Cost and Usage Reports to stay informed about what your S3 buckets cost.
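As a sketch of the alerting side, the function below builds keyword arguments for a CloudWatch billing alarm on estimated S3 charges. It assumes billing alerts are enabled for the account (AWS publishes billing metrics only in us-east-1, under the `AWS/Billing` namespace) and that an SNS topic already exists; the alarm name pattern, topic ARN, and threshold are placeholders.

```python
def s3_billing_alarm_params(threshold_usd: float, sns_topic_arn: str) -> dict:
    """Keyword arguments for CloudWatch put_metric_alarm on estimated S3 charges."""
    return {
        "AlarmName": f"s3-estimated-charges-over-{threshold_usd:g}-usd",
        "Namespace": "AWS/Billing",
        # EstimatedCharges is month-to-date, so the threshold is a monthly budget.
        "MetricName": "EstimatedCharges",
        "Dimensions": [
            {"Name": "Currency", "Value": "USD"},
            {"Name": "ServiceName", "Value": "AmazonS3"},
        ],
        "Statistic": "Maximum",
        "Period": 21600,  # six hours; billing data is refreshed a few times a day
        "EvaluationPeriods": 1,
        "Threshold": threshold_usd,
        "ComparisonOperator": "GreaterThanThreshold",
        "AlarmActions": [sns_topic_arn],  # notify this SNS topic when triggered
    }


# Hypothetical SNS topic ARN for illustration.
params = s3_billing_alarm_params(100, "arn:aws:sns:us-east-1:111122223333:billing-alerts")
print(params["AlarmName"])
```

With boto3 installed and credentials configured, the dictionary would be passed as `boto3.client("cloudwatch", region_name="us-east-1").put_metric_alarm(**params)`. Keeping the parameters in a plain function makes them easy to review and reuse across accounts.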

Keeping Irrelevant Files

Delete files when they are no longer relevant. If you have files that are not currently needed and can be recreated with relative ease, consider deleting them too. Also check for incomplete multipart uploads: S3 stores, and bills you for, the parts of uploads that never finished, so delete them. S3 Lifecycle rules can automate this cleanup by expiring objects and aborting incomplete uploads according to policies you define. Lifecycle rules can also transition data to cheaper storage classes, so you can retain it at a reduced cost.
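A minimal S3 Lifecycle configuration covering these cases might look like the following. The prefixes, rule IDs, and day counts are illustrative assumptions; the dictionary follows the shape that boto3’s `put_bucket_lifecycle_configuration` expects for its `LifecycleConfiguration` argument.

```python
def build_lifecycle_config(scratch_prefix: str = "tmp/", archive_prefix: str = "logs/") -> dict:
    """Return an S3 Lifecycle configuration in the shape boto3 expects."""
    return {
        "Rules": [
            {
                # Parts of multipart uploads that never completed are still billed.
                "ID": "abort-incomplete-uploads",
                "Filter": {"Prefix": ""},  # empty prefix applies to the whole bucket
                "Status": "Enabled",
                "AbortIncompleteMultipartUpload": {"DaysAfterInitiation": 7},
            },
            {
                # Scratch files that are easy to recreate: delete after 30 days.
                "ID": "expire-scratch-files",
                "Filter": {"Prefix": scratch_prefix},
                "Status": "Enabled",
                "Expiration": {"Days": 30},
            },
            {
                # Data worth keeping but rarely read: move to Standard-IA after 30 days.
                "ID": "transition-to-standard-ia",
                "Filter": {"Prefix": archive_prefix},
                "Status": "Enabled",
                "Transitions": [{"Days": 30, "StorageClass": "STANDARD_IA"}],
            },
        ]
    }


config = build_lifecycle_config()
print([rule["ID"] for rule in config["Rules"]])
```

It could be applied with `boto3.client("s3").put_bucket_lifecycle_configuration(Bucket="my-bucket", LifecycleConfiguration=config)`, where `my-bucket` is a placeholder. Note that this call replaces the bucket’s existing lifecycle configuration rather than merging with it.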

S3 Versioning

With S3 Versioning enabled, AWS charges for every version of an object that is stored or transferred. Each version is a complete object in its own right, not a diff, so if you keep multiple versions of an object, you are billed for all of them.
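The arithmetic is simple but easy to overlook. This sketch assumes the $0.023/GB-month S3 Standard rate and a hypothetical 10 GB file that is overwritten once a day in a versioned bucket.

```python
STORAGE_PER_GB_MONTH = 0.023  # assumed S3 Standard storage rate


def versioned_storage_cost(version_sizes_gb: list[float]) -> float:
    """Every stored version is a full object, so the bill covers their total size."""
    return sum(version_sizes_gb) * STORAGE_PER_GB_MONTH


# A 10 GB file overwritten daily for 30 days keeps 30 full versions (300 GB).
thirty_versions = [10.0] * 30
print(
    f"${versioned_storage_cost(thirty_versions):.2f} per month vs "
    f"${versioned_storage_cost([10.0]):.2f} for the current version alone"
)
```

A lifecycle rule with `NoncurrentVersionExpiration` is one way to cap how long old versions accumulate.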

Large Files

Compressing objects reduces the storage capacity required, which lowers costs, and it can also speed up transfers. Depending on the use case, compression algorithms such as LZ4, gzip, or Zstandard (zstd) offer a good balance of speed and compression ratio.
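Of the algorithms listed, only gzip ships in Python’s standard library, so this sketch uses it to compress a payload before upload and report the space saved. The sample log data is made up; real savings depend heavily on how compressible your objects are, and already-compressed formats such as JPEG or ZIP gain little.

```python
import gzip


def compress_for_upload(data: bytes, level: int = 6) -> tuple[bytes, float]:
    """Gzip-compress a payload and return (compressed_bytes, fraction_saved)."""
    compressed = gzip.compress(data, compresslevel=level)
    saved = 1 - len(compressed) / len(data) if data else 0.0
    return compressed, saved


# Repetitive text such as logs or CSV exports compresses very well.
sample = b"2024-01-01T00:00:00Z INFO request served in 12ms\n" * 10_000
body, saved = compress_for_upload(sample)
print(f"{len(sample):,} -> {len(body):,} bytes ({saved:.0%} smaller)")
assert gzip.decompress(body) == sample  # lossless round trip
```

When uploading with boto3, passing `ContentEncoding="gzip"` to `put_object` records how the object was encoded so clients can decompress it.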

API Calls Remain the Same

Whether you upload a large object or a small one, the API cost depends on the type of request, not on the data size. The only way to reduce request costs is therefore to make fewer requests: upload in bulk rather than in many small pieces, for example by batching or archiving many files into a single file before upload.
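Here is a minimal sketch of that batching approach using Python’s standard `tarfile` module: many small files are packed into one gzip-compressed archive in memory, and the PUT charges are compared. It assumes the $0.005 per 1,000 PUT requests rate quoted earlier; the file names and contents are made up.

```python
import io
import tarfile

PUT_PER_1000 = 0.005  # assumed S3 Standard PUT price


def bundle_files(files: dict[str, bytes]) -> bytes:
    """Pack many small files into one gzip-compressed tar archive in memory."""
    buffer = io.BytesIO()
    with tarfile.open(fileobj=buffer, mode="w:gz") as tar:
        for name, content in files.items():
            info = tarfile.TarInfo(name=name)
            info.size = len(content)
            tar.addfile(info, io.BytesIO(content))
    return buffer.getvalue()


def put_cost(num_requests: int) -> float:
    """Request charges depend only on the number of PUTs, not on object size."""
    return num_requests / 1000 * PUT_PER_1000


small_files = {f"events/{i:05d}.json": b'{"ok": true}' for i in range(10_000)}
archive = bundle_files(small_files)
print(f"individual uploads: ${put_cost(len(small_files)):.4f}")
print(f"one bundled upload: ${put_cost(1):.6f} ({len(archive):,} bytes)")
```

The trade-off is access granularity: reading a single file from the bundle means downloading the whole archive, so this suits data that is written and read together, such as batches of logs or events.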

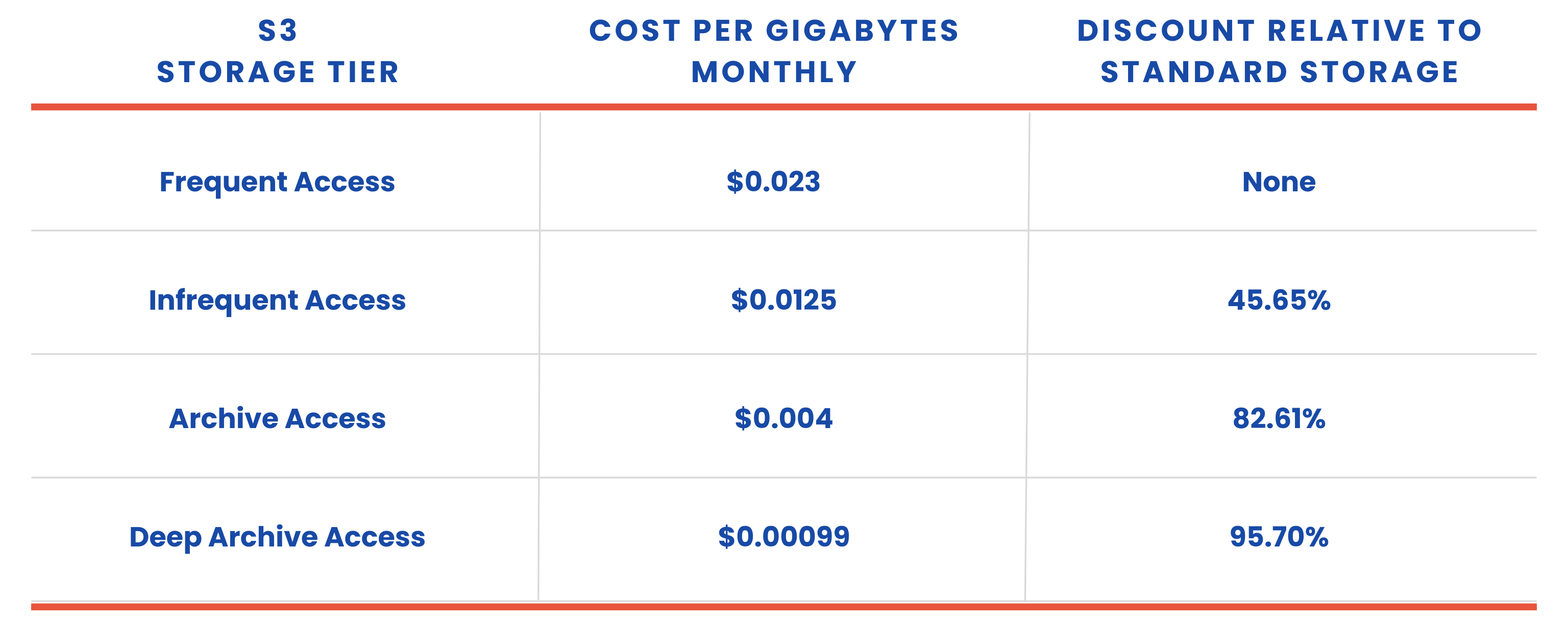

S3 Intelligent-Tiering

Moving objects from S3 Standard into the S3 Intelligent-Tiering storage class incurs additional fees. Depending on your objects’ access patterns, Intelligent-Tiering may or may not save you money.

Data stored in S3 Standard-Infrequent Access (Standard-IA) incurs a retrieval fee of $0.01 per GB, but its storage price is roughly 45% lower than that of S3 Standard ($0.0125 vs. $0.023 per GB-month), where retrieval is free. Standard-IA is the best option if you retrieve your objects no more than about once a month. You can also use it for disaster recovery data.
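The “once a month” rule of thumb falls out of a break-even calculation. This sketch assumes US East list prices of $0.023 and $0.0125 per GB-month for S3 Standard and Standard-IA storage and a $0.01/GB Standard-IA retrieval fee; it ignores Standard-IA’s minimum storage duration and minimum billable object size.

```python
STANDARD_PER_GB_MONTH = 0.023      # assumed S3 Standard storage rate
STANDARD_IA_PER_GB_MONTH = 0.0125  # assumed S3 Standard-IA storage rate
IA_RETRIEVAL_PER_GB = 0.01         # assumed Standard-IA retrieval fee


def monthly_cost_per_gb(retrievals_per_month: float) -> tuple[float, float]:
    """Return (standard, standard_ia) monthly cost for 1 GB read N times a month."""
    standard = STANDARD_PER_GB_MONTH  # retrieval is free in S3 Standard
    infrequent = STANDARD_IA_PER_GB_MONTH + retrievals_per_month * IA_RETRIEVAL_PER_GB
    return standard, infrequent


# Break-even: 0.0125 + 0.01 * n = 0.023, so n = 1.05 full reads per month.
break_even = (STANDARD_PER_GB_MONTH - STANDARD_IA_PER_GB_MONTH) / IA_RETRIEVAL_PER_GB
print(f"Standard-IA is cheaper below ~{break_even:.2f} full reads per month")
```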

Objects in Intelligent-Tiering’s Frequent Access tier are billed at S3 Standard rates, so they save nothing. On top of that, Intelligent-Tiering charges a monitoring and automation fee of $0.0025 per 1,000 objects per month. The formula is:

Monitoring costs per month = (number of objects with Intelligent Tiering/1000 objects) * $0.0025

Moving objects to lower tiers comes with tradeoffs, such as reduced redundancy (S3 One Zone-IA, for example, keeps data in a single Availability Zone), a lower availability SLA, and longer retrieval times for the archive tiers. There is also a fee for transitioning objects between storage classes, at $0.01 per 1,000 objects:

Transition costs per month = (number of data objects being transitioned / 1000 objects) * $0.01
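Putting the two formulas together shows why large numbers of small objects are the expensive case: both fees scale with object count, not with data size. A sketch, assuming the $0.0025 monitoring and $0.01 transition rates per 1,000 objects given above and a hypothetical 10 million objects:

```python
MONITORING_PER_1000_OBJECTS = 0.0025  # monthly monitoring and automation fee
TRANSITION_PER_1000_OBJECTS = 0.01    # one-time lifecycle transition fee


def intelligent_tiering_fees(num_objects: int, months: int = 12) -> dict[str, float]:
    """One-time transition fee plus recurring monitoring fees for N objects."""
    transition = num_objects / 1000 * TRANSITION_PER_1000_OBJECTS
    monitoring = num_objects / 1000 * MONITORING_PER_1000_OBJECTS * months
    return {
        "transition": transition,
        "monitoring": monitoring,
        "total": transition + monitoring,
    }


# 10M objects: $100 to transition once, then $25/month ($300/year) to monitor.
fees = intelligent_tiering_fees(num_objects=10_000_000, months=12)
print({item: round(usd, 2) for item, usd in fees.items()})
```

These fees only pay off if enough data actually moves to the cheaper tiers to outweigh them.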

AWS Key Management Service (KMS) Alternative

Using Amazon S3 with AWS KMS (SSE-KMS) increases your bill because each object write requires a KMS request to generate a data key and each read requires a KMS decryption request. To reduce this cost, enable Amazon S3 Bucket Keys. According to AWS, Bucket Keys can cut KMS request costs by up to 99%, without any changes to dependent applications.
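A minimal sketch of both halves: the default-encryption configuration that enables a Bucket Key (the shape boto3’s `put_bucket_encryption` expects for `ServerSideEncryptionConfiguration`), and a rough estimate of the KMS request charges it avoids. The key ARN is a placeholder, and the $0.03 per 10,000 KMS requests price is an assumption to check against current AWS KMS pricing.

```python
KMS_PER_10000_REQUESTS = 0.03  # assumed AWS KMS request price


def bucket_key_encryption_config(kms_key_arn: str) -> dict:
    """Default SSE-KMS encryption with an S3 Bucket Key enabled."""
    return {
        "Rules": [
            {
                "ApplyServerSideEncryptionByDefault": {
                    "SSEAlgorithm": "aws:kms",
                    "KMSMasterKeyID": kms_key_arn,
                },
                # S3 uses a bucket-level key to create object data keys,
                # so most reads and writes no longer call KMS directly.
                "BucketKeyEnabled": True,
            }
        ]
    }


def kms_request_cost(object_operations: int) -> float:
    """Without a Bucket Key, roughly one KMS request per object read or write."""
    return object_operations / 10_000 * KMS_PER_10000_REQUESTS


# Hypothetical key ARN for illustration.
cfg = bucket_key_encryption_config("arn:aws:kms:us-east-1:111122223333:key/example")
print(f"~${kms_request_cost(100_000_000):,.2f}/month in KMS requests without a Bucket Key")
```

The configuration would be applied with `boto3.client("s3").put_bucket_encryption(Bucket="my-bucket", ServerSideEncryptionConfiguration=cfg)`. Enabling a Bucket Key on an existing bucket affects objects written afterward; existing objects keep their original encryption settings until they are rewritten.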

Note that you are billed for disabled KMS keys in your account, not just active ones. Consider scheduling disabled keys for deletion, but only after confirming that no data or services still depend on them: data encrypted under a deleted key can no longer be decrypted.

Amazon Elastic Block Store (EBS)

Amazon EBS consists of volumes and snapshots. Volumes attach to EC2 instances and provide block storage. Snapshots offer an easy, secure way to back up block data, including data volumes, boot volumes, and block data from sources outside EBS. Volumes and snapshots are therefore priced separately.

Standard EBS snapshot storage costs $0.05 per GB-month, and the EBS Snapshots Archive tier costs $0.0125 per GB-month.
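For long-lived snapshots, the difference compounds over time. This storage-only sketch uses the two rates above and a hypothetical 500 GB of snapshot data; it ignores that archived snapshots are stored as full copies rather than incremental changes, as well as archive retrieval fees and minimum archive periods.

```python
STANDARD_SNAPSHOT_PER_GB_MONTH = 0.05   # assumed standard tier rate
ARCHIVE_SNAPSHOT_PER_GB_MONTH = 0.0125  # assumed archive tier rate


def snapshot_storage_cost(gb: float, months: int, archive: bool = False) -> float:
    """Storage-only cost of keeping `gb` of snapshot data for `months` months."""
    rate = ARCHIVE_SNAPSHOT_PER_GB_MONTH if archive else STANDARD_SNAPSHOT_PER_GB_MONTH
    return gb * rate * months


# 500 GB kept for a year: $300 in the standard tier vs $75 in the archive tier.
print(f"standard: ${snapshot_storage_cost(500, 12):.2f}")
print(f"archive:  ${snapshot_storage_cost(500, 12, archive=True):.2f}")
```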

Optimizing AWS EBS Culprits

Optimizing your AWS EBS cost is a straightforward process that can be categorized into two parts.

Deleting Unattached Volumes and Old Snapshots

Deleting a volume can significantly reduce your storage spend, because EBS volumes are billed for the storage you provision, whether or not it is used. Deleting a snapshot, by contrast, might not reduce your bill much: snapshots are incremental, so only the data that no other snapshot references is actually removed.
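A toy model makes the incremental behavior concrete. Each snapshot is represented as the set of block IDs it needs for a full restore; blocks shared between snapshots are stored (and billed) once. This is a conceptual simplification with made-up block numbers, not how EBS tracks data internally.

```python
def billed_blocks(snapshots: dict[str, set[int]]) -> set[int]:
    """Snapshot storage covers the union of blocks referenced by any snapshot."""
    blocks: set[int] = set()
    for referenced in snapshots.values():
        blocks |= referenced
    return blocks


def blocks_freed_by_deleting(snapshots: dict[str, set[int]], name: str) -> set[int]:
    """Only blocks that no other snapshot references are actually removed."""
    others = {key: value for key, value in snapshots.items() if key != name}
    return snapshots[name] - billed_blocks(others)


# Each set lists the blocks a snapshot needs; shared blocks are stored once.
snaps = {
    "day1": {1, 2, 3, 4},
    "day2": {1, 2, 3, 5},  # block 4 was overwritten, so block 5 is new
    "day3": {1, 2, 3, 5, 6},
}
print(blocks_freed_by_deleting(snaps, "day1"))  # only block 4 is freed
print(blocks_freed_by_deleting(snaps, "day2"))  # nothing is freed
```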

Stopping an instance does not stop EBS charges: the volumes attached to it keep their data and continue to add to your bill. To stop paying for them, delete any volumes or snapshots you no longer need.
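A sketch for finding forgotten volumes: EC2 reports a volume that is not attached to any instance in the `available` state. The records below are made-up sample data shaped like the `Volumes` entries in an EC2 DescribeVolumes response, and the $0.08 per GB-month gp3 price is an assumption (other volume types cost more or less).

```python
GP3_PER_GB_MONTH = 0.08  # assumed gp3 price; other volume types differ


def unattached_volumes(volumes: list[dict]) -> list[dict]:
    """Volumes in the 'available' state are not attached to any instance."""
    return [v for v in volumes if v["State"] == "available"]


def monthly_waste(volumes: list[dict]) -> float:
    """Volumes are billed on provisioned size (GiB), whether used or not."""
    return sum(v["Size"] for v in unattached_volumes(volumes)) * GP3_PER_GB_MONTH


# Hypothetical records shaped like DescribeVolumes "Volumes" entries.
sample = [
    {"VolumeId": "vol-aaa", "State": "in-use", "Size": 100},
    {"VolumeId": "vol-bbb", "State": "available", "Size": 500},
    {"VolumeId": "vol-ccc", "State": "available", "Size": 50},
]
for vol in unattached_volumes(sample):
    print(vol["VolumeId"], vol["Size"], "GiB")
print(f"~${monthly_waste(sample):.2f}/month if all are gp3")
```

With boto3, the same filtering can be pushed to the API with `ec2.describe_volumes(Filters=[{"Name": "status", "Values": ["available"]}])`. Volumes attached to a stopped instance still report `in-use`, so finding those requires checking the instance state separately.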

Emptying the Recycle Bin

You pay for EBS storage wherever it lives: in a volume, in a snapshot, or in the Recycle Bin. The Recycle Bin retains deleted snapshots so you can recover them if they were deleted by mistake, and snapshots in the Recycle Bin are billed at the same rate as regular snapshots. To make a deletion final and stop paying for it, remove the snapshot from the Recycle Bin as well.

General Recommendations

Giving inexperienced users permission to operate the S3 service can also lead to unnecessary costs. Many developers have launched demo instances and forgotten to shut them down afterward. Establish and follow procedures for working with cloud infrastructure, and promote a culture of ownership over the resources people create.

If an AWS resource goes unused for a long period, turn it off or delete it where possible; it may be adding to waste without your knowledge.

Define monitoring metrics wherever possible and check them regularly to avoid surprises. Use alerts and notifications so you don’t wake up to an outrageous bill. It may also be worth building and training a team to architect and manage your cloud spend. Finally, consider adopting a retain-everything policy from the start, with backups kept in lower-cost S3 tiers, because it is sometimes impossible to tell which data will be useful in the long term.

Looking for an easier way to manage cloud storage costs? Find out how Zesty Disk can cut EBS costs by up to 70%! Contact one of our cloud experts to learn more.
