How to Master Databases to Get More from Your Kubernetes Clusters

Principal DevOps Engineer

Kubernetes is the de facto container orchestration system to run scalable and reliable microservices. Due to its modern, cloud-native approach, Kubernetes is one of the key technologies that brought about significant improvements in software development. Because of this, more and more applications have migrated to Kubernetes, including but not limited to databases.

In a traditional database, you store, manage, and serve the data required for your applications, customers, and analyses. Users, frontends, and other applications can connect to the database to query data. With the cloud-native approach, applications have become smaller and more numerous, leading to changes in how databases are used.

This blog will focus on running databases in Kubernetes and discuss the related advantages, limitations, and best practices.

Running Databases on Kubernetes

Kubernetes and containers were initially intended for stateless applications where no data is generated and saved for the future. With the technological development in data center infrastructure and storage, Kubernetes and containers are now also used for stateful applications such as message queues and databases.

There are two mainstream options for running databases on Kubernetes: StatefulSets and sidecar containers.

StatefulSets

StatefulSets are the native Kubernetes resources to manage stateful applications. They manage pods by assigning persistent identities for rescheduling and storage assignments, ensuring that pods always get the same unique ID and volume attachment when scheduled to another node. This sticky characteristic makes it possible to run databases on Kubernetes reliably and with the ability to scale.
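To make the sticky identity concrete, here is a minimal sketch that builds a StatefulSet manifest as a plain Python dictionary and lists the pod and volume claim names the controller would derive from it. The names `mysql` and `data`, the image tag, and the storage size are illustrative assumptions, and the manifest is deliberately trimmed (no credentials, probes, or resource limits), so treat it as a starting point rather than a deployable configuration.

```python
import json


def statefulset_manifest(name: str, image: str, replicas: int, storage: str) -> dict:
    """Build a trimmed StatefulSet manifest as a plain dict (illustrative only)."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "StatefulSet",
        "metadata": {"name": name},
        "spec": {
            # Name of the governing (usually headless) Service for stable DNS names.
            "serviceName": name,
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "volumeMounts": [
                                {"name": "data", "mountPath": "/var/lib/mysql"}
                            ],
                        }
                    ]
                },
            },
            # One PersistentVolumeClaim is created per pod from this template.
            "volumeClaimTemplates": [
                {
                    "metadata": {"name": "data"},
                    "spec": {
                        "accessModes": ["ReadWriteOnce"],
                        "resources": {"requests": {"storage": storage}},
                    },
                }
            ],
        },
    }


def pod_identities(manifest: dict) -> list[tuple[str, str]]:
    """Return (pod name, claim name) pairs following StatefulSet naming rules."""
    name = manifest["metadata"]["name"]
    claim = manifest["spec"]["volumeClaimTemplates"][0]["metadata"]["name"]
    return [
        (f"{name}-{i}", f"{claim}-{name}-{i}")
        for i in range(manifest["spec"]["replicas"])
    ]


manifest = statefulset_manifest("mysql", "mysql:8.0", replicas=3, storage="10Gi")
print(json.dumps(manifest, indent=2))  # JSON output can be piped to kubectl apply -f -
print(pod_identities(manifest))
```

Each pod keeps its ordinal name and its own claim across rescheduling, which is what lets a database replica find its data again on a different node.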

Still, although Kubernetes does its best to run databases, deploying and scaling them is not straightforward. When you inspect the resources that a MySQL Helm chart deploys alongside the StatefulSet, you will see configmaps, services, roles, role bindings, secrets, network policies, and service accounts. Managing these Kubernetes resources through updates, backups, and restores can quickly become overwhelming.

There are three vital resources for deploying databases on Kubernetes:

- ConfigMaps store the application configuration; you can use them as files, environment variables, or command-line arguments in pods.

- Secrets are the Kubernetes resources for storing sensitive data like passwords, tokens, or keys. These do have one critical drawback: By default, they are stored unencrypted (only base64-encoded) in the underlying data store (etcd). To secure secrets in Kubernetes installations, consider enabling encryption at rest or using an external secrets manager.

- Kubernetes Service resources allow other applications running in the Kubernetes cluster to connect and use the database instances.
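The three resources above can be sketched as plain Python dictionaries. This is a minimal illustration, assuming hypothetical names (`mysql-config`, `mysql-credentials`, `mysql`) and made-up values. It also shows why the Secret drawback matters: values in a Secret's `data` field are only base64-encoded, so anyone who can read the object can decode them.

```python
import base64
import json


def config_map(name: str, settings: dict[str, str]) -> dict:
    """Non-sensitive configuration, consumable as files or environment variables."""
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": name},
        "data": settings,
    }


def secret(name: str, values: dict[str, str]) -> dict:
    """Sensitive values; note that base64 is an encoding, not encryption."""
    encoded = {
        key: base64.b64encode(value.encode()).decode()
        for key, value in values.items()
    }
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": encoded,
    }


def service(name: str, port: int) -> dict:
    """Stable in-cluster endpoint that routes to pods labeled app=<name>."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "selector": {"app": name},
            "ports": [{"port": port, "targetPort": port}],
        },
    }


resources = [
    config_map("mysql-config", {"max_connections": "200"}),
    secret("mysql-credentials", {"password": "example-password"}),
    service("mysql", 3306),
]
print(json.dumps(resources, indent=2))

# Decoding the stored value needs no key, only read access to the Secret.
stored = resources[1]["data"]["password"]
print(base64.b64decode(stored).decode())
```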

Luckily, you do not need to create all these resources by yourself. There are widely used Helm charts to deploy popular database installations—including MySQL, PostgreSQL, or MariaDB—as StatefulSets with a wide range of configuration options.

Sidecar Containers

Here, Kubernetes pods encapsulate multiple containers and run them together as a single unit. This encapsulation and the microservice architecture support creating small applications that focus on doing one thing and doing it well. This makes the sidecar pattern a popular approach: the main container holds the business logic, while additional sidecar containers perform other tasks, such as log collection, metric publishing, or data caching.

Running sidecars next to the main applications comes with a huge benefit: low (even close to zero) latency. On the other hand, since there is no centralized database, each application instance holds its own copy of the data, and consistency between those copies is weak. The pattern is therefore a better fit for cache databases such as Redis than for a system of record. Because Redis is an in-memory cache, you can add a Redis container to each instance of your application and deploy it to Kubernetes.
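A minimal sketch of such a pod, again built as a Python dictionary, might look like the following. The application image `example.com/shop-api:1.0`, the pod name, and the `CACHE_URL` environment variable are hypothetical. The only Kubernetes behavior the sketch relies on is that containers in the same pod share a network namespace, so the application can reach the sidecar on `localhost`.

```python
import json


def pod_with_redis_sidecar(name: str, app_image: str) -> dict:
    """Pod running an application container next to a Redis cache sidecar."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "containers": [
                {
                    "name": "app",
                    "image": app_image,
                    # Hypothetical variable read by the application at startup.
                    "env": [
                        {"name": "CACHE_URL", "value": "redis://localhost:6379"}
                    ],
                },
                {
                    # Sidecar: a private cache for this pod only.
                    "name": "redis-cache",
                    "image": "redis:7",
                    "ports": [{"containerPort": 6379}],
                },
            ]
        },
    }


pod = pod_with_redis_sidecar("shop-api", "example.com/shop-api:1.0")
print(json.dumps(pod, indent=2))
```

Because every replica of the application gets its own Redis, a value cached in one pod is invisible to the others, which is acceptable for a cache but not for authoritative data.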

Reliability in Chaos

It is possible to run almost every kind of application on Kubernetes, including all major databases. However, you need to check some critical database characteristics against the high volatility—i.e., chaos—in Kubernetes clusters. In other words, it is common to see nodes go down, pods being rescheduled, and networks being fragmented. You need to ensure that the database you deploy on Kubernetes will resist these events and have the following characteristics.

Failover and Replication

It is common in Kubernetes to see some nodes being disconnected. If you have database instances running on these nodes, you and other database instances will lose access to them. Therefore, the database should support failover elections, data sharding, and replication to overcome any risks of data loss.
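As a rough illustration of why replica counts matter for failover, the sketch below assumes a majority-based election, which many replicated databases use in some form. The exact rules differ per database, so the functions here are a simplified model and not any particular product's algorithm.

```python
def quorum(replicas: int) -> int:
    """Smallest number of members that forms a strict majority."""
    return replicas // 2 + 1


def tolerated_failures(replicas: int) -> int:
    """How many members can be lost while a majority still remains."""
    return replicas - quorum(replicas)


def can_elect_primary(replicas: int, reachable: int) -> bool:
    """A new primary can be elected only if a majority is still reachable."""
    return reachable >= quorum(replicas)


for size in (1, 2, 3, 4, 5):
    print(size, quorum(size), tolerated_failures(size))

# With three replicas spread over three nodes, losing one node is survivable.
print(can_elect_primary(replicas=3, reachable=2))
```

Under this model, two replicas tolerate no more failures than one, and four tolerate no more than three, which is one reason odd replica counts spread across separate nodes are a common choice.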

Caching

Kubernetes is designed to run a high number of small applications; this means that databases with more caching and small data layers are more appropriate to run on the clusters. A well-known example is the native approach of Elasticsearch with its sharding of indices across instances in the cluster.

Operators

Kubernetes and its API are designed to run with minimal human intervention. Because of this, it is beneficial to use a database with a Kubernetes Operator to handle configurations, the creation of new databases, scaling instances up or down, backups, and restores.
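At its core, an operator runs a reconcile loop: it compares the desired state declared in a custom resource with the state it observes and derives the actions needed to close the gap. The sketch below simulates that idea in plain Python. The `DatabaseSpec` and `DatabaseStatus` types and the action strings are hypothetical, and a real operator would call the Kubernetes API instead of returning text.

```python
from dataclasses import dataclass


@dataclass
class DatabaseSpec:
    """Desired state, as a user would declare it in a custom resource."""
    replicas: int
    version: str


@dataclass
class DatabaseStatus:
    """Observed state, as the operator would read it from the cluster."""
    replicas: int
    version: str


def reconcile(spec: DatabaseSpec, status: DatabaseStatus) -> list[str]:
    """Return the actions needed to move the observed state to the desired one."""
    actions = []
    if status.version != spec.version:
        actions.append(f"upgrade {status.version} -> {spec.version}")
    if status.replicas < spec.replicas:
        actions.append(f"scale up by {spec.replicas - status.replicas}")
    elif status.replicas > spec.replicas:
        actions.append(f"scale down by {status.replicas - spec.replicas}")
    return actions


desired = DatabaseSpec(replicas=3, version="8.0")
observed = DatabaseStatus(replicas=1, version="5.7")
print(reconcile(desired, observed))

# Once the states match, the loop has nothing left to do.
print(reconcile(desired, DatabaseStatus(replicas=3, version="8.0")))
```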

Managed Database vs. Database on Kubernetes

Sharing the same infrastructure and Kubernetes cluster for stateless applications and databases is tempting, but there are some factors you need to take into account before choosing this route. We’ve already discussed the Kubernetes-friendly characteristics your database should have, but there are additional features required.

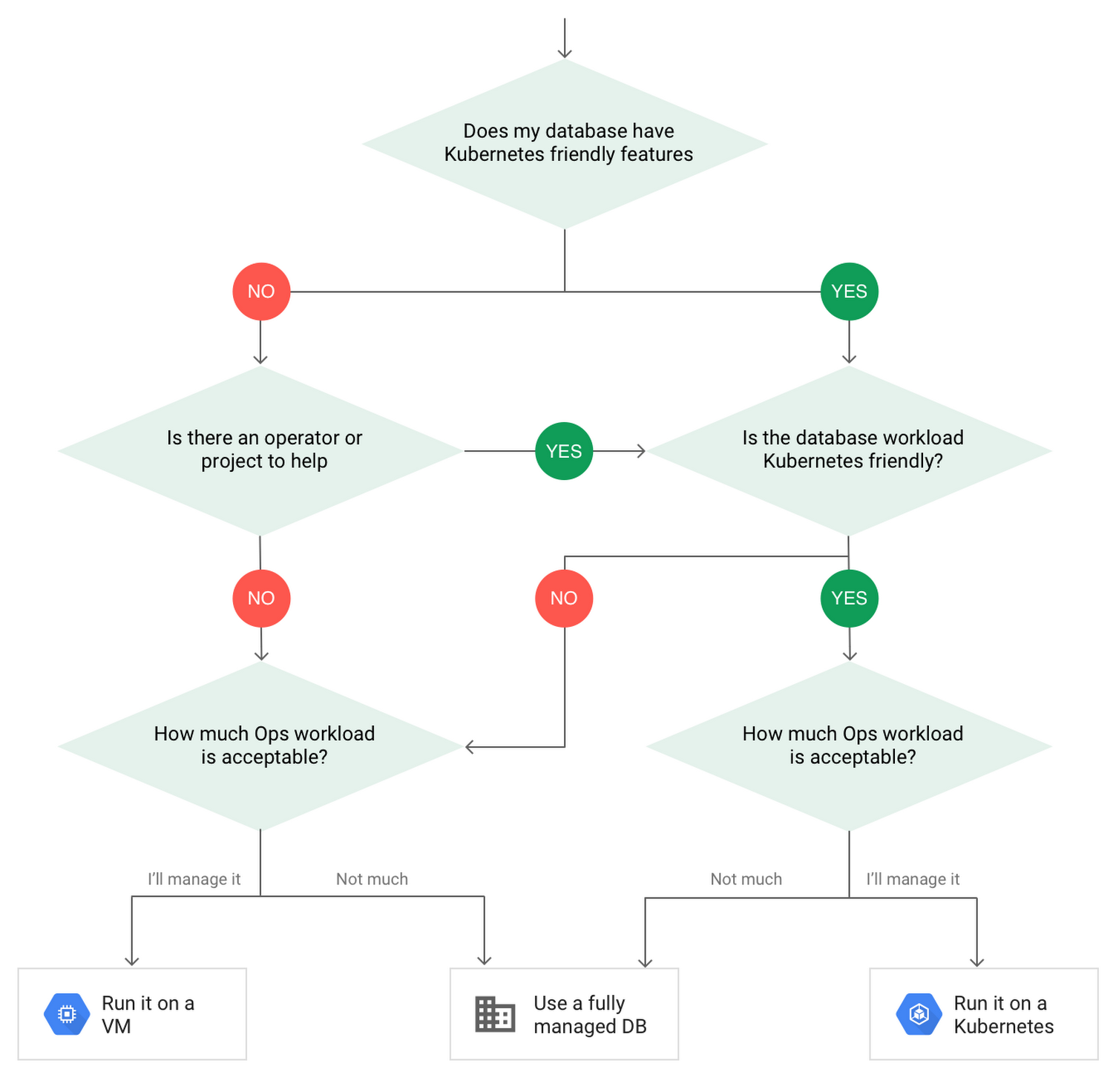

Google Cloud suggests the following decision tree to choose between running a database on a VM, running a database on Kubernetes, or using a fully managed database service:

Figure 1: Decision tree for DBs (Source: Google Cloud)

If you answered the first question with a “yes” and ensured that the database has Kubernetes-friendly features, you’ll need to next consider the database workload and consequences.

If you expect high resource usage, running on a Kubernetes cluster could be pricey compared to a managed service. On the other hand, running on Kubernetes could be a better option if you need a real-time and latency-critical database.

Finally, you need to consider the operational requirements and your team structure. Databases have their own lifecycles, with patches, maintenance windows, and backups. Patches are inevitable and especially essential for security, as you need them to comply with security policies and certifications. Even if you use package managers or operators, you still need to manually find the next version that includes the security patches.

In a Kubernetes installation, you’re responsible for handling and managing these operations, whereas in a managed service, the cloud provider performs these functions for you. Using a managed database service could also be beneficial for your applications if you have critical performance indicators or SLAs.

In short, you need to consider your requirements, budget, and operational capabilities before diving into a database installation on Kubernetes.

Best Practices for Running Databases on Kubernetes

If you decide that Kubernetes is the best place to run your database, there are some best practices you should follow:

- Scalability: You should consider the horizontal and vertical scalability of your database in detail. It is suggested to use StatefulSets for horizontal scalability, and Kubernetes features such as the Vertical Pod Autoscaler to adjust CPU and memory allocations.

- Operations: Automation is vital for a successful Kubernetes installation, and it is suggested to deploy Kubernetes Operators first and then create database instances as Kubernetes custom resources. However, if your databases are constantly built and destroyed, custom resources are not the best option since they can create residual Kubernetes resources in the cluster.

- GitOps and configuration as code: Store every change in the source repository, and, following GitOps principles, deploy automatically when developers make changes.

- Monitoring, visibility, and alerts: Ensure that you collect metrics and logs and create alerts based on usage, user access, and database health.

- Troubleshooting with tools and playbooks: Failovers are unavoidable, and you need to be prepared to take action with predefined playbooks, tools, and helper scripts.
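For the scalability item in the list above, a vertical autoscaling policy for a database StatefulSet could be sketched as follows. This assumes the Vertical Pod Autoscaler add-on is installed in the cluster. The target name `mysql` and the resource bounds are illustrative, and `updateMode` is set to `Off` here so the autoscaler only publishes recommendations instead of restarting database pods.

```python
import json


def vpa_manifest(target: str, min_memory: str, max_memory: str) -> dict:
    """VerticalPodAutoscaler targeting a StatefulSet (requires the VPA add-on)."""
    return {
        "apiVersion": "autoscaling.k8s.io/v1",
        "kind": "VerticalPodAutoscaler",
        "metadata": {"name": f"{target}-vpa"},
        "spec": {
            "targetRef": {
                "apiVersion": "apps/v1",
                "kind": "StatefulSet",
                "name": target,
            },
            # "Off" produces recommendations only; no pods are evicted.
            "updatePolicy": {"updateMode": "Off"},
            "resourcePolicy": {
                "containerPolicies": [
                    {
                        "containerName": "*",
                        "minAllowed": {"memory": min_memory},
                        "maxAllowed": {"memory": max_memory},
                    }
                ]
            },
        },
    }


vpa = vpa_manifest("mysql", min_memory="1Gi", max_memory="8Gi")
print(json.dumps(vpa, indent=2))
```

Keeping the manifest in a source repository and generating it from code like this also fits the GitOps item in the same list.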

Conclusion

Kubernetes is the latest game-changer in cloud-native software development to run stateless applications and databases. However, where you deploy your database depends on your requirements, budget, and operational capabilities. If you don’t have solid use cases, it may not be worth it to jump on the database-on-Kubernetes wagon simply due to the hype or for a single-line Helm installation.

If you do opt for Kubernetes, there is no silver-bullet approach. However, with the best practices and database characteristics discussed above, you should be able to successfully design your database deployments running on Kubernetes clusters.

If you need a professional opinion to analyze and design your database setup and Kubernetes clusters, you can contact one of our cloud experts to give Zesty a try!
