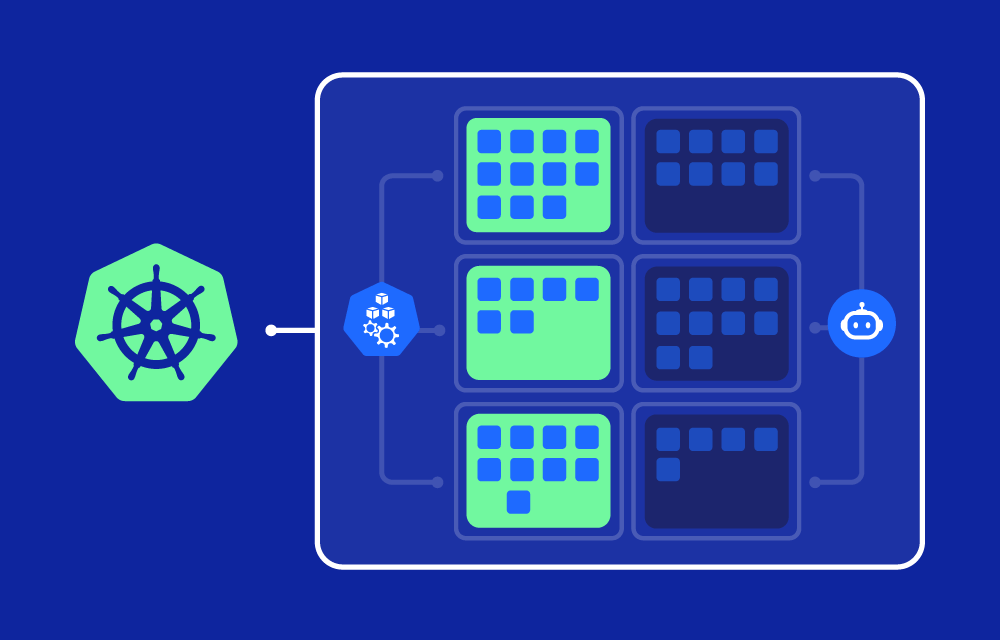

How Node Autoprovisioning Works:

Node autoprovisioning is typically managed by the Cluster Autoscaler. The Cluster Autoscaler compares the resource requests made by pods against the capacity of the existing nodes to determine whether the cluster can handle the current workload. If there are unschedulable pods (i.e., pods that cannot be placed on any node due to resource constraints), the autoscaler automatically provisions new nodes to accommodate them.
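As a rough illustration of the capacity check described above, the following sketch models a pod as a pair of CPU and memory requests and reports which pending pods fit on no existing node. The `Resources`, `Node`, `fits`, and `unschedulable` names are hypothetical and not part of any Kubernetes API. In a real cluster the scheduler makes this decision and the autoscaler reacts to it; the sketch only models the resource comparison and checks each pod independently.

```python
from dataclasses import dataclass


@dataclass
class Resources:
    cpu_millicores: int
    memory_mib: int


@dataclass
class Node:
    name: str
    allocatable: Resources  # capacity available to pods on this node
    requested: Resources    # sum of requests of pods already placed here


def fits(pod: Resources, node: Node) -> bool:
    """Return True if the node's free capacity covers the pod's requests."""
    free_cpu = node.allocatable.cpu_millicores - node.requested.cpu_millicores
    free_mem = node.allocatable.memory_mib - node.requested.memory_mib
    return pod.cpu_millicores <= free_cpu and pod.memory_mib <= free_mem


def unschedulable(pods: dict, nodes: list) -> list:
    """Return the names of pending pods that fit on no existing node.

    Simplification: each pod is checked on its own, so two pods competing
    for the same free capacity are not detected.
    """
    return [name for name, req in pods.items()
            if not any(fits(req, node) for node in nodes)]
```

For example, with one node that has 500 millicores free, a pod requesting 250 millicores fits while a pod requesting 2000 millicores is reported as unschedulable, which is the condition that would trigger provisioning.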

Key operational steps include:

- Monitoring Workload Demand:

The Cluster Autoscaler continuously monitors the cluster for unschedulable pods. These are pods that cannot be placed on existing nodes due to insufficient resources (e.g., CPU, memory).

- Dynamic Node Provisioning:

When it detects unschedulable pods, node autoprovisioning kicks in and adds new nodes to the cluster, selecting appropriate instance types and sizes based on the resource requests of the pending pods.

- Node Deprovisioning:

When workloads decrease and nodes are underutilized, the autoscaler can also deprovision (remove) unnecessary nodes to optimize resource usage and reduce costs. This prevents idle nodes from running unnecessarily, ensuring the cluster remains cost-effective.

- Cloud Provider Integration:

Node autoprovisioning is tightly integrated with cloud providers like AWS, Google Cloud, and Azure. When more resources are needed, the Cluster Autoscaler can request new nodes from the cloud provider, scaling the infrastructure dynamically based on demand.
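The provisioning and deprovisioning steps can be sketched in a few lines. This is a simplified model, not the logic of the Cluster Autoscaler or of any cloud provider: `InstanceType`, `pick_instance`, and `should_remove` are hypothetical names, the catalog and prices are made up, the 0.5 utilization threshold is an illustrative value, and the sketch assumes all pending pods are packed onto a single new node.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class InstanceType:
    name: str
    cpu_millicores: int
    memory_mib: int
    hourly_cost: float  # made-up price, for comparison only


def pick_instance(pending: list, catalog: list) -> Optional[InstanceType]:
    """Pick the cheapest instance type that holds all pending requests.

    `pending` is a list of (cpu_millicores, memory_mib) request pairs.
    Returns None when no single instance type is large enough.
    """
    need_cpu = sum(cpu for cpu, _ in pending)
    need_mem = sum(mem for _, mem in pending)
    candidates = [t for t in catalog
                  if t.cpu_millicores >= need_cpu and t.memory_mib >= need_mem]
    return min(candidates, key=lambda t: t.hourly_cost, default=None)


def should_remove(requested_cpu: int, allocatable_cpu: int,
                  threshold: float = 0.5) -> bool:
    """Flag a node as a removal candidate when its requested CPU share
    falls below the (illustrative) utilization threshold."""
    return requested_cpu / allocatable_cpu < threshold
```

A real autoscaler also has to confirm that the pods on a removal candidate can be rescheduled elsewhere before draining the node; the sketch leaves that step out.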

Benefits of Kubernetes Node Autoprovisioning:

- Automatic Scaling:

Node autoprovisioning ensures that the cluster scales dynamically as workloads increase or decrease. This reduces the need for manual intervention in managing node capacity and ensures that the cluster always has the right number of nodes to meet demand.

- Cost Optimization:

By automatically adding nodes when needed and removing them when they are no longer necessary, node autoprovisioning optimizes resource usage and helps reduce cloud infrastructure costs. This avoids paying for idle resources.

- Improved Performance:

By automatically provisioning new nodes in response to workload spikes, Kubernetes ensures that applications have sufficient resources to run efficiently, preventing bottlenecks or performance issues due to resource constraints.

- Flexibility and Adaptability:

Node autoprovisioning allows Kubernetes clusters to adapt to unpredictable workloads, making it ideal for environments with fluctuating demand, such as seasonal traffic spikes or large-scale data processing tasks.
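To make the cost argument concrete, the sketch below compares a cluster sized for peak demand around the clock with one whose node count follows demand hour by hour. The demand profile is invented for illustration, the function names are hypothetical, and the model ignores provisioning delays and scale-down cooldowns, so it shows an upper bound on the saving rather than a prediction.

```python
def static_node_hours(demand: list) -> int:
    """Node-hours when the cluster is sized for peak demand the whole time."""
    return max(demand) * len(demand)


def autoscaled_node_hours(demand: list) -> int:
    """Node-hours when the node count tracks demand hour by hour."""
    return sum(demand)


# Hypothetical day: 2 nodes for 20 quiet hours, 10 nodes for 4 peak hours.
demand = [2] * 20 + [10] * 4

static = static_node_hours(demand)
autoscaled = autoscaled_node_hours(demand)
reduction = 1 - autoscaled / static

print(f"static: {static} node-hours")
print(f"autoscaled: {autoscaled} node-hours")
print(f"reduction: {reduction:.0%}")
```

With this made-up profile the static cluster consumes 240 node-hours and the autoscaled one 80, which is where the saving from not paying for idle nodes comes from.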

Use Cases for Node Autoprovisioning:

- E-commerce Websites:

During peak shopping seasons, e-commerce platforms often experience traffic surges. Node autoprovisioning ensures that the cluster can automatically scale out to handle the increased traffic and scale back in during quieter periods, optimizing resource use and performance.

- Data Processing Pipelines:

In environments where large-scale data processing is required, such as ETL pipelines or machine learning workloads, node autoprovisioning ensures that the cluster can scale dynamically to accommodate batch processing tasks and scale down once processing is complete.

- CI/CD Workflows:

Continuous integration and continuous delivery (CI/CD) workflows often require variable resources during build and deployment processes. Node autoprovisioning allows Kubernetes to automatically add nodes for builds or tests and then remove them when the tasks are complete.

Tools Supporting Node Autoprovisioning:

- Kubernetes Cluster Autoscaler:

The Cluster Autoscaler is the primary tool for managing node autoprovisioning in Kubernetes. It monitors unschedulable pods and adjusts the number of nodes in the cluster to meet current resource demands. The autoscaler integrates with cloud providers like AWS, GCP, and Azure to automatically provision new instances when needed.

- Karpenter:

Karpenter is an open-source Kubernetes node provisioning tool designed to replace or complement the Cluster Autoscaler. It intelligently provisions nodes based on workload requirements and provides faster scaling with more flexibility in choosing instance types and sizes.

Challenges of Node Autoprovisioning:

- Resource Allocation Complexity:

Deciding the optimal number and size of nodes can be challenging, particularly in large clusters with diverse workloads. Improper configuration of resource limits and requests may lead to inefficient scaling or under-provisioning.

- Cost Management:

While node autoprovisioning optimizes resource usage, it can also lead to unexpected costs if not properly monitored. Using cloud cost management tools or implementing FinOps practices helps keep cloud spending under control during dynamic scaling events.

- Scaling Delays:

Depending on the cloud provider and instance availability, there may be delays in provisioning new nodes, which could impact workload performance during sudden spikes in demand.
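The effect of misconfigured resource requests on scaling can be shown with simple arithmetic. The sketch below estimates how many nodes a deployment needs when scheduling is driven by CPU requests alone; `nodes_needed` is a hypothetical helper, and the model assumes identical pods, identical nodes, and no other workloads or memory constraints.

```python
import math


def nodes_needed(replicas: int, cpu_request_millicores: int,
                 node_allocatable_millicores: int) -> int:
    """Estimate the node count for identical pods on identical nodes,
    considering CPU requests only."""
    pods_per_node = node_allocatable_millicores // cpu_request_millicores
    if pods_per_node == 0:
        raise ValueError("a single pod does not fit on this node size")
    return math.ceil(replicas / pods_per_node)


# 40 replicas on nodes with 4000 millicores allocatable (made-up figures).
oversized = nodes_needed(40, 2000, 4000)    # request far above real usage
right_sized = nodes_needed(40, 500, 4000)   # request close to real usage
print(oversized, right_sized)
```

In this example, requesting 2000 millicores per pod leads to 20 nodes, while a 500 millicore request leads to 5. Because the autoscaler acts on requests rather than observed usage, inflated requests translate directly into extra nodes, and requests set too low have the opposite effect of packing pods onto nodes that cannot serve them well.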
