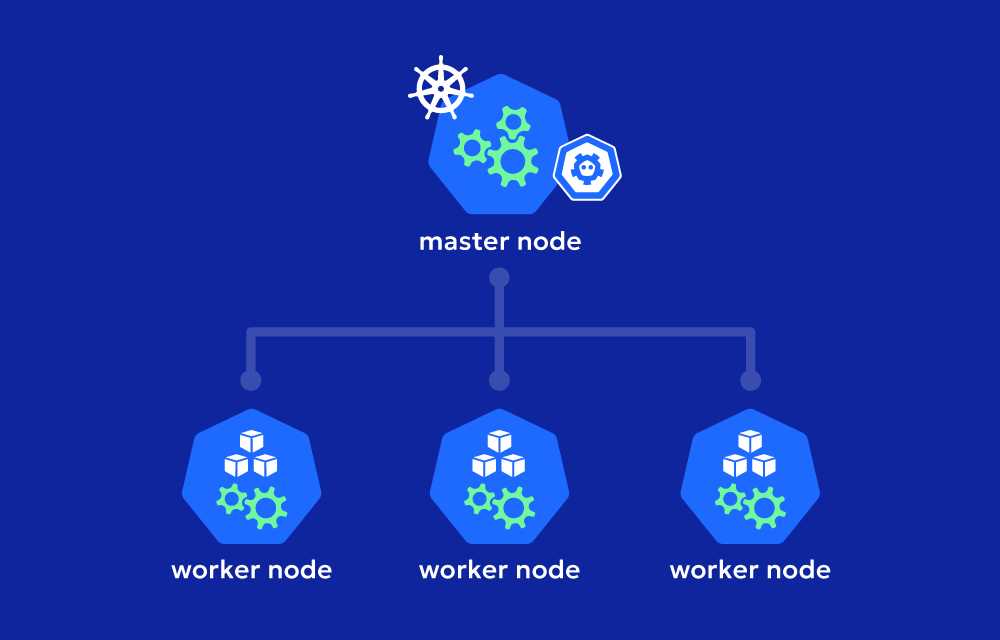

1. Master Nodes (Control Plane Nodes)

- The master node is the brain of the Kubernetes cluster. It manages the entire cluster and orchestrates the deployment and lifecycle of applications across worker nodes. Its job is to make scheduling decisions, monitor the state of the cluster, and handle cluster management tasks.

- The master node hosts critical components that manage the cluster, including:

- API Server: Acts as the frontend for the Kubernetes control plane, handling all communication with the cluster.

- Controller Manager: Runs the controllers that drive the cluster toward its desired state, covering tasks such as node health monitoring, job control, and pod replication.

- Scheduler: Decides which nodes to place newly created pods on, based on resource availability and other constraints.

- etcd: A distributed key-value store that stores all the data required to manage the cluster. It’s the “source of truth” for the cluster state.
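To make the scheduler's role more concrete, here is a minimal sketch of the filter-then-score idea in Python. It is a toy model, not the real kube-scheduler: the `Node` and `Pod` classes, the resource fields, and the "most free CPU wins" scoring rule are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_cpu_m: int   # free CPU in millicores (illustrative field)
    free_mem_mi: int  # free memory in MiB (illustrative field)


@dataclass
class Pod:
    name: str
    cpu_m: int   # requested CPU in millicores
    mem_mi: int  # requested memory in MiB


def schedule(pod, nodes):
    """Return the name of the chosen node, or None if no node fits."""
    # Filter: keep only nodes with enough free CPU and memory for the pod.
    feasible = [
        n for n in nodes
        if n.free_cpu_m >= pod.cpu_m and n.free_mem_mi >= pod.mem_mi
    ]
    if not feasible:
        return None  # in a real cluster the pod would stay Pending

    # Score: prefer the node with the most free CPU (hypothetical rule).
    best = max(feasible, key=lambda n: n.free_cpu_m)

    # Bind: reserve the requested resources on the chosen node.
    best.free_cpu_m -= pod.cpu_m
    best.free_mem_mi -= pod.mem_mi
    return best.name


nodes = [Node("worker-1", 500, 1024), Node("worker-2", 2000, 4096)]
print(schedule(Pod("web", 1000, 512), nodes))
```

Here `worker-1` is filtered out because it has too little free CPU, so the pod lands on `worker-2`. The real scheduler applies many more filters and scoring rules (affinity, taints, topology), but the overall shape is similar.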

Key Responsibilities of Master Nodes

- Scheduling pods on worker nodes

- Monitoring the health and status of the cluster

- Managing configurations and cluster-wide policies

- Scaling applications based on demand

- Maintaining communication between components through the API server
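Several of these responsibilities come down to one pattern: compare the desired state with the observed state and correct the difference. The following Python sketch illustrates that reconciliation idea under simplifying assumptions; the `reconcile` function and the `web-N` pod names are hypothetical, and real controllers work through the API server rather than on in-memory lists.

```python
import itertools

# Hypothetical ID source so that replacement pods get unique names.
_pod_ids = itertools.count()


def reconcile(desired_replicas, running_pods):
    """Return the pod list after one reconciliation pass."""
    pods = list(running_pods)

    # Too few pods: create replacements until the count matches.
    while len(pods) < desired_replicas:
        pods.append(f"web-{next(_pod_ids)}")

    # Too many pods: remove the surplus from the end of the list.
    del pods[desired_replicas:]
    return pods


pods = reconcile(3, [])    # initial rollout: three pods are created
pods.pop(1)                # simulate one pod crashing
pods = reconcile(3, pods)  # the next pass brings the count back to three
print(len(pods))
```

Running the loop repeatedly is what lets the cluster recover from failures without manual intervention: the controller does not need to know why a pod disappeared, only that the count is off.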

2. Worker Nodes

- Worker nodes are where your actual application workloads run. Each worker node hosts the pods and ensures they have the necessary computing resources to operate effectively.

- A worker node consists of the following key components:

- Kubelet: An agent running on each node that communicates with the API server on the master node. It ensures that the containers described in each assigned pod are running and healthy, and restarts them if necessary.

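- Kube-proxy: Maintains the network rules on each node that implement Kubernetes Services, routing traffic addressed to a Service to its backing pods, whether they run on the same node or on other nodes in the cluster. Direct pod-to-pod connectivity itself is provided by the cluster's network plugin (CNI).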

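- Container Runtime: The software responsible for running the containers (e.g., containerd or CRI-O; Docker Engine is supported only through the cri-dockerd adapter, because Kubernetes 1.24 removed the built-in dockershim). It pulls container images and starts and stops containers at the kubelet's request through the Container Runtime Interface (CRI).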
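As a rough illustration of how the kubelet keeps pods running, the sketch below compares the containers a pod should have with the states observed on the node and returns the actions needed. The `sync_pod` function and the state names (`running`, `failed`, `missing`) are assumptions made for this example; only the `restartPolicy` values (`Always`, `OnFailure`, `Never`) come from Kubernetes itself.

```python
def sync_pod(spec, container_states):
    """Return the actions needed to bring a pod's containers in line with its spec."""
    policy = spec.get("restartPolicy", "Always")
    actions = []
    for name in spec["containers"]:
        # Containers with no recorded state have never been started.
        state = container_states.get(name, "missing")
        if state == "running":
            continue  # nothing to do
        if state == "missing":
            actions.append(("start", name))
        elif state == "failed" and policy in ("Always", "OnFailure"):
            actions.append(("restart", name))
    return actions


spec = {"containers": ["app", "sidecar"], "restartPolicy": "Always"}
print(sync_pod(spec, {"app": "failed"}))
```

In a real node the kubelet would pass these actions to the container runtime, which performs the actual start and stop operations.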

Key Responsibilities of Worker Nodes

- Running and managing the pods assigned to them by the master node

- Maintaining network connections for communication between pods

- Ensuring the required resources (CPU, memory, storage) are available for the running pods

Cluster Architecture: How Master and Worker Nodes Work Together

In a typical Kubernetes cluster:

- Master nodes serve as the control center, orchestrating everything in the cluster. They decide where pods should run, monitor the health of the cluster, and make adjustments when necessary.

- Worker nodes are responsible for executing the tasks assigned by the master node. They run the pods, providing the resources and networking necessary for the applications.

Example

When you deploy an application to a Kubernetes cluster, the API server on the master node receives the request and stores the desired state, including the number of replicas you specified. The controllers create the required pods, and the scheduler assigns each one to a suitable worker node. The kubelet on each of those nodes then has the container runtime pull the images and start the containers, and the workload begins executing.
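The request itself is usually a Deployment manifest that declares the container image and the desired replica count. The Python sketch below builds such a manifest as a dictionary and prints it as JSON. The helper name `deployment_manifest`, the application name `web`, and the image tag `nginx:1.27` are placeholders chosen for illustration.

```python
import json


def deployment_manifest(name, image, replicas):
    """Build a minimal apps/v1 Deployment manifest as a plain dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            # Desired number of pods; the control plane works to maintain it.
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


manifest = deployment_manifest("web", "nginx:1.27", 3)
print(json.dumps(manifest, indent=2))
```

Saved to a file, this JSON could be submitted with `kubectl apply -f`, which accepts JSON as well as YAML. From that point on, the flow described above takes over.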

Master and worker nodes work in tandem to ensure that applications run efficiently, scale correctly, and recover automatically if failures occur. This setup enables Kubernetes to maintain high availability and scalability across distributed environments.

FAQ: Nodes in Kubernetes

1. How many nodes can you have in a cluster in Kubernetes?

The maximum number of nodes in a Kubernetes cluster depends on the Kubernetes version and the configuration limits set by the cloud provider or infrastructure. As a guideline, Kubernetes is designed to support up to 5,000 nodes and 150,000 pods per cluster, but practical limits vary with factors like network bandwidth, etcd performance, and control plane sizing. Managed Kubernetes services such as Amazon EKS, Google GKE, and Azure AKS have their own specific node limits.

2. How many pods can you have in a node?

The maximum number of pods per node also depends on the configuration and the node’s capacity. By default, the kubelet limits each node to 110 pods. This limit can be adjusted through the kubelet's `maxPods` setting to suit your applications and the capabilities of your node. The more resources (CPU, memory) a node has, the more pods it can typically handle, but it’s essential to keep efficiency and stability in mind.
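In practice, the pod limit is only one of several ceilings; CPU and memory usually run out first. The following Python sketch estimates how many identical pods fit on a node by taking the smallest of the three bounds. The function name and the example figures are hypothetical, and the calculation assumes every pod requests the same amount of CPU and memory.

```python
def max_schedulable_pods(alloc_cpu_m, alloc_mem_mi, pod_cpu_m, pod_mem_mi, max_pods=110):
    """Estimate how many identical pods fit on one node."""
    by_cpu = alloc_cpu_m // pod_cpu_m    # bound from CPU (millicores)
    by_mem = alloc_mem_mi // pod_mem_mi  # bound from memory (MiB)
    # The tightest bound wins: the pod limit, CPU, or memory.
    return min(max_pods, by_cpu, by_mem)


# A node with 3800m CPU and 14000 MiB available, pods requesting 250m / 512 MiB.
print(max_schedulable_pods(3800, 14000, 250, 512))  # prints 15 (CPU-bound)
```

With these numbers, CPU caps the node at 15 pods long before the 110-pod limit matters. The limit becomes the binding constraint mainly on large nodes running many small pods.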

3. What is node overhead?

Node overhead refers to the resources (CPU, memory) consumed by the processes running on the node itself, separate from the workloads (pods) it hosts. This includes components like the kubelet, kube-proxy, the container runtime, and other system services. Administrators can reserve capacity for these components through the kubelet's kube-reserved and system-reserved settings; what remains is reported as the node's allocatable resources, which the scheduler uses when placing pods. Consider node overhead when planning resource allocation to ensure there’s enough room for both system services and application workloads.
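The capacity left for pods follows a simple formula from the Kubernetes documentation: allocatable = capacity - kube-reserved - system-reserved - eviction threshold. The Python sketch below applies it to a hypothetical node; the reservation figures are illustrative assumptions, not recommended values.

```python
def allocatable(capacity, kube_reserved=0, system_reserved=0, eviction_threshold=0):
    """Capacity left for pods after node overhead is subtracted."""
    return capacity - kube_reserved - system_reserved - eviction_threshold


# Hypothetical node: 4 CPUs (4000 millicores) and 16 GiB (16384 MiB) of memory.
cpu_m = allocatable(4000, kube_reserved=100, system_reserved=100)
mem_mi = allocatable(16384, kube_reserved=1024, system_reserved=512, eviction_threshold=100)

print(cpu_m)   # prints 3800
print(mem_mi)  # prints 14748
```

On a running cluster, the same figures appear in the `Capacity` and `Allocatable` sections of `kubectl describe node`.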