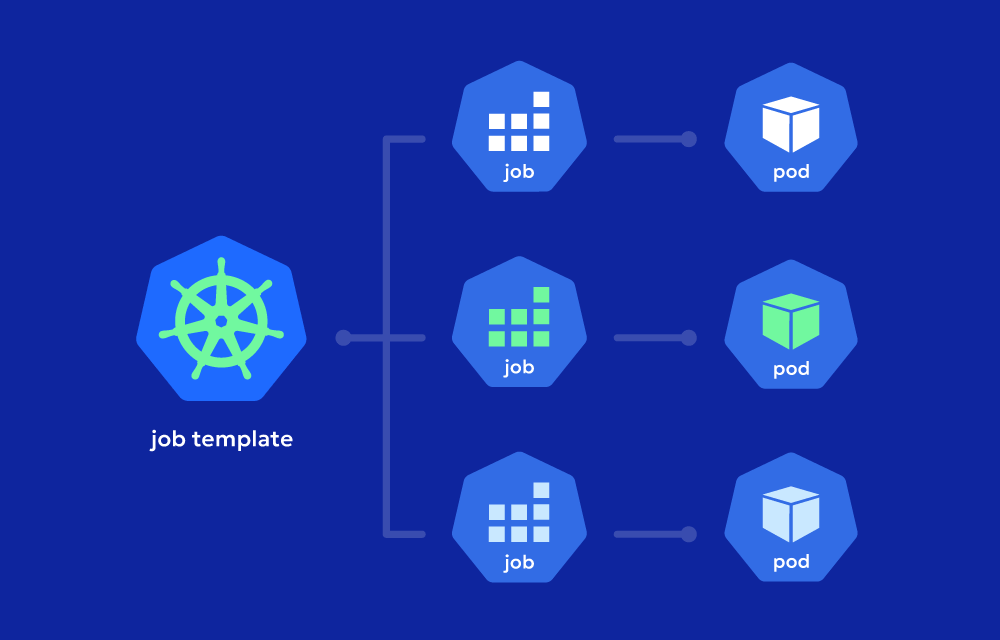

How Kubernetes Jobs Work

Kubernetes Jobs are designed for tasks that need to run to completion, such as batch processing, database migrations, or cleanup operations. When a Job is created, Kubernetes launches one or more pods to perform the task, and the Job continues until the specified number of successful completions has been reached.

Key operational aspects

- Pod Management: The Job controller manages the pods created by the Job. If a pod fails during execution, the controller starts a replacement pod, so the Job can still reach completion despite individual pod failures, up to the configured retry limit.

- Parallelism: Kubernetes Jobs can run multiple pods in parallel to speed up processing. The `parallelism` setting specifies how many pods may run at the same time, allowing tasks like data processing to be split into smaller, parallel workloads.

- Completion and Restart Policies: A Job finishes once the required number of pods (`completions`) have succeeded, or it is marked as failed once the retry limit (`backoffLimit`) is exceeded. The pod template's `restartPolicy` must be `Never` or `OnFailure`. Together, these settings control how failures are retried and when the Job counts as done.
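As a rough sketch of how these settings fit together, the manifest below asks for ten successful completions with at most three pods running at a time. The Job name, container name, and command are placeholders chosen for illustration, not part of any real workload.

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: parallel-batch-job        # hypothetical name
spec:
  completions: 10                 # the Job is done after 10 pods succeed
  parallelism: 3                  # at most 3 pods run at the same time
  backoffLimit: 4                 # mark the Job failed after 4 retries
  template:
    spec:
      restartPolicy: Never        # failed pods are replaced by new pods
      containers:
      - name: worker              # hypothetical container name
        image: busybox:1.36
        command: ["sh", "-c", "echo processing one work item"]
```

With `restartPolicy: Never`, each failure produces a new pod, which makes failed attempts easier to inspect. With `OnFailure`, the container is restarted inside the same pod instead.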

Types of Kubernetes Jobs

- Single-Completion Job: This is the most basic type of Job. One pod is created to perform a single task, and the Job is marked as complete when that pod finishes successfully.

- Parallel Jobs: Parallel Jobs allow multiple pods to work simultaneously to process tasks more quickly. This is useful when you need to divide a large job into smaller tasks that can run concurrently.

- Indexed Parallel Jobs: Indexed Parallel Jobs assign each pod a completion index, starting at 0, so the pods can work on different segments of the job. The index is exposed to the container through the `JOB_COMPLETION_INDEX` environment variable. This is useful when each pod needs to process a specific partition of the data.

- CronJob: A CronJob is a separate resource that creates Jobs on a schedule. Similar to a Unix cron job, it triggers Jobs at regular intervals or specific times, automating routine tasks like backups, cleanup operations, or periodic data analysis.
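To illustrate the indexed variant, here is a minimal sketch of a Job that runs five pods, each receiving its own index. The Job name, container name, and the echo command are assumptions made for the example. A real workload would use the index to select its data partition.

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: indexed-partition-job     # hypothetical name
spec:
  completions: 5                  # indexes 0 through 4
  parallelism: 5                  # run all five partitions at once
  completionMode: Indexed         # give each pod a completion index
  template:
    spec:
      restartPolicy: Never
      containers:
      - name: partition-worker    # hypothetical container name
        image: busybox:1.36
        # JOB_COMPLETION_INDEX is set by Kubernetes for Indexed Jobs
        command: ["sh", "-c", "echo processing partition $JOB_COMPLETION_INDEX"]
```

The Job is complete once there is one successful pod for every index.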

Example of a Kubernetes Job

Here’s a basic example of a Kubernetes Job definition that runs a pod to perform a data processing task:

    apiVersion: batch/v1
    kind: Job
    metadata:
      name: data-processing-job
    spec:
      template:
        spec:
          containers:
          - name: data-processor
            image: my-data-processor:latest
            command: ["python", "process_data.py"]
          restartPolicy: OnFailure
      backoffLimit: 4

What happens in this example?

- The Job creates a pod whose container, data-processor, runs a Python script (process_data.py) from the my-data-processor:latest image. The pod itself gets a generated name based on the Job name.

- The restartPolicy is set to OnFailure, meaning the container is restarted in place if it exits with a failure.

- The backoffLimit is set to 4, meaning Kubernetes will retry up to 4 times before marking the Job as failed.

Use Cases

- Data Processing: Kubernetes Jobs are commonly used for data processing tasks where a batch of data needs to be processed and the task needs to run to completion. For example, processing logs or transforming datasets can be performed using a Job.

- Database Migrations: Jobs are well-suited for performing database migrations where changes need to be applied once and tracked for completion.

- Backup and Cleanup Tasks: Jobs are ideal for running one-off backup operations or performing cleanup tasks like removing unused resources or archives after they reach a certain age.

- Cron Jobs: With CronJobs, Kubernetes can automate recurring tasks like backups, generating reports, or performing health checks, running these jobs at regular intervals without the need for manual intervention.
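A recurring task such as a nightly backup might be declared roughly as follows. This is only a sketch: the CronJob name, schedule, container name, and command are hypothetical placeholders rather than a real backup tool.

```yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: nightly-backup            # hypothetical name
spec:
  schedule: "0 2 * * *"           # every day at 02:00
  concurrencyPolicy: Forbid       # skip a run if the previous one is still active
  successfulJobsHistoryLimit: 3   # keep the last 3 successful Jobs
  failedJobsHistoryLimit: 1       # keep the last failed Job
  jobTemplate:
    spec:
      backoffLimit: 2
      template:
        spec:
          restartPolicy: OnFailure
          containers:
          - name: backup          # hypothetical container name
            image: busybox:1.36
            command: ["sh", "-c", "echo running backup"]
```

Each scheduled run creates an ordinary Job from `jobTemplate`, so everything that applies to Jobs (retries, parallelism, cleanup) applies to these runs as well.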

Advantages

- Reliability: Kubernetes Jobs keep working toward successful completion even when pods fail. Failed tasks are retried according to the defined policies, up to the configured limit, offering fault tolerance and resilience.

- Parallelism for Efficiency: Jobs allow tasks to be processed in parallel, increasing efficiency for data processing or large workloads. This parallelism helps reduce execution time for time-sensitive tasks.

- Automatic Cleanup: Finished Jobs and their pods are kept by default so that logs and status remain available. Setting `ttlSecondsAfterFinished` on a Job lets Kubernetes delete it, along with its pods, a fixed time after it completes or fails, so the cluster isn't weighed down by unnecessary pods.

- Scheduling with CronJobs: CronJobs allow for the automation of recurring tasks, helping to reduce manual intervention for routine processes like backups or periodic data tasks.
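The automatic cleanup mentioned above could be configured along these lines. The one-hour TTL, the Job name, and the command are illustrative assumptions, so pick a TTL that leaves enough time to collect logs.

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: cleanup-demo-job          # hypothetical name
spec:
  ttlSecondsAfterFinished: 3600   # delete the Job and its pods 1 hour after it finishes
  template:
    spec:
      restartPolicy: Never
      containers:
      - name: task                # hypothetical container name
        image: busybox:1.36
        command: ["sh", "-c", "echo done"]
```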

Challenges

- Resource Management: Running many parallel jobs simultaneously can place significant demands on cluster resources. Proper monitoring and resource allocation are necessary to avoid overloading the cluster.

- Complex Retry Logic: Complex tasks might require fine-tuning of the retry and backoff policies to handle various failure scenarios appropriately, especially when dealing with external systems.

- Monitoring and Observability: While Jobs ensure tasks run to completion, it’s important to implement good monitoring practices (using tools like Prometheus) to track progress, spot failures, and understand performance bottlenecks in Jobs.
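One possible way to address the first two challenges is sketched below: resource requests and limits bound what each pod can consume, `activeDeadlineSeconds` caps the Job's total runtime, and a pod failure policy distinguishes retryable failures from fatal ones. All names, resource values, and the exit code 42 are assumptions for illustration. The `podFailurePolicy` field requires `restartPolicy: Never` and is only available in recent Kubernetes releases, so check your cluster version before relying on it.

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: bounded-batch-job         # hypothetical name
spec:
  completions: 20
  parallelism: 4                  # limit concurrent pods to protect the cluster
  backoffLimit: 6
  activeDeadlineSeconds: 1800     # fail the Job if it runs longer than 30 minutes
  podFailurePolicy:
    rules:
    - action: FailJob             # exit code 42 (assumed) means a non-retryable error
      onExitCodes:
        containerName: worker
        operator: In
        values: [42]
    - action: Ignore              # do not count node drains or preemption as retries
      onPodConditions:
      - type: DisruptionTarget
  template:
    spec:
      restartPolicy: Never        # required when podFailurePolicy is set
      containers:
      - name: worker              # hypothetical container name
        image: busybox:1.36
        command: ["sh", "-c", "echo processing"]
        resources:
          requests:
            cpu: 250m
            memory: 256Mi
          limits:
            cpu: "1"
            memory: 512Mi
```

Rules are evaluated in order, so the fatal exit code is checked before the disruption rule. Failures that match neither rule count toward `backoffLimit` as usual.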