Why cgroups Matter in Kubernetes

You can define CPU and memory limits in your pod specs—but it’s cgroups that make sure those limits are real. Without cgroups, containers could:

- Use unlimited memory and crash your node

- Starve other workloads by consuming all CPU cycles

- Evade resource governance and wreck multi-tenant clusters

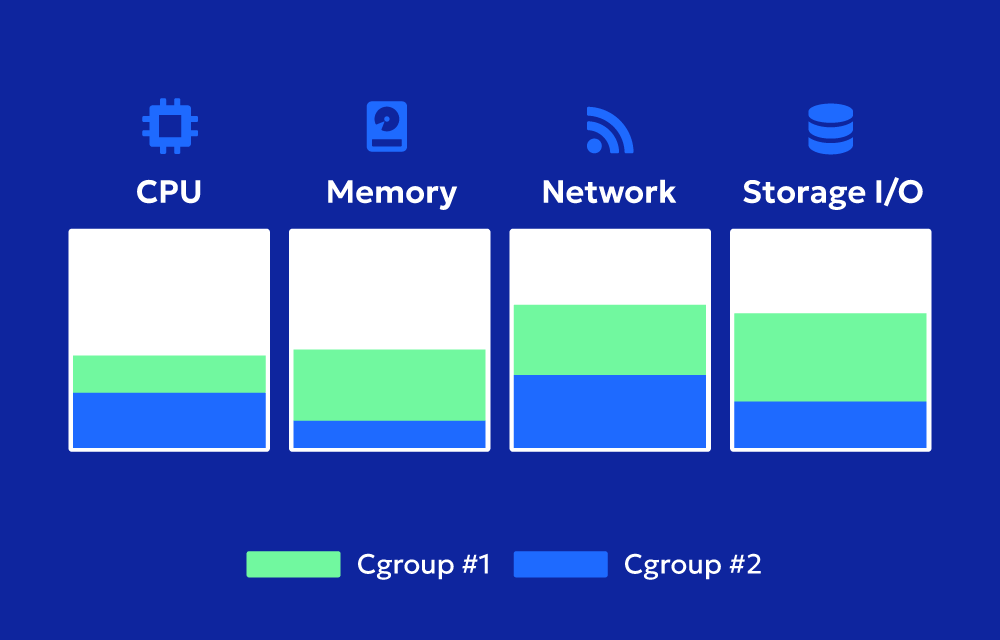

Thanks to cgroups, Kubernetes can isolate workloads and enforce fair resource allocation.

How Kubernetes Uses cgroups (Under the Hood)

Let’s break it down:

- You define resource requests and limits in a pod:

      resources:
        requests:
          cpu: "250m"
          memory: "128Mi"
        limits:
          cpu: "500m"
          memory: "256Mi"

- The kubelet reads this config and passes it to the container runtime (e.g., containerd or CRI-O).

- The runtime creates a cgroup for each container using those values.

- The Linux kernel uses that cgroup to:

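- Weight CPU time so the container gets a share proportional to its 250m request when the node is contended

- Throttle CPU at 500m (if needed)

- Enforce the 256 MiB memory limit, OOM-killing the container if it exceeds that limit

The 128 MiB memory request works differently: the scheduler uses it to place the pod and the kubelet uses it when ranking pods for eviction, but by default it is not reserved inside the cgroup.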

You never need to interact with cgroups directly—but they’re always working behind the scenes.
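To make the translation concrete, here is a minimal sketch of how the example requests and limits could map to cgroup values. It assumes the default CFS period of 100 ms and the commonly documented kubelet conversions (1 CPU = 1024 shares); the function names are hypothetical, and the quantity parsers handle only the simple forms used in this article.

```python
CFS_PERIOD_US = 100_000  # assumed default CFS period (100 ms)

_MEMORY_UNITS = {"Ki": 1024, "Mi": 1024**2, "Gi": 1024**3}


def parse_cpu_millis(quantity):
    """Convert a CPU quantity such as "250m" or "0.5" to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return int(round(float(quantity) * 1000))


def parse_memory_bytes(quantity):
    """Convert a memory quantity such as "256Mi" to bytes (binary suffixes only)."""
    for suffix, factor in _MEMORY_UNITS.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)


def cpu_shares(millis):
    """CPU request -> relative weight (cpu.shares on cgroups v1)."""
    return max(2, millis * 1024 // 1000)


def cpu_quota_us(millis, period_us=CFS_PERIOD_US):
    """CPU limit -> microseconds of CPU time allowed per period."""
    return max(1000, millis * period_us // 1000)


def cgroup_settings(resources):
    """Map a pod-spec style resources dict to illustrative cgroup values."""
    requests = resources.get("requests", {})
    limits = resources.get("limits", {})
    return {
        "cpu_shares": cpu_shares(parse_cpu_millis(requests["cpu"])),
        "cpu_quota_us": cpu_quota_us(parse_cpu_millis(limits["cpu"])),
        "cpu_period_us": CFS_PERIOD_US,
        "memory_limit_bytes": parse_memory_bytes(limits["memory"]),
    }


example = {
    "requests": {"cpu": "250m", "memory": "128Mi"},
    "limits": {"cpu": "500m", "memory": "256Mi"},
}
print(cgroup_settings(example))
```

For the example pod this gives 256 CPU shares, a quota of 50,000 µs per 100,000 µs period (half a core), and a memory limit of 268,435,456 bytes. On cgroups v2 the same quota and period appear together in `cpu.max` and the memory limit in `memory.max`.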

Example: What Happens When Memory Limit Is Exceeded

You set:

    limits:
      memory: "256Mi"

If your application suddenly spikes and uses 300 MiB of RAM:

- The container is terminated by the kernel’s OOM killer

- Kubernetes records the termination reason as OOMKilled in the container status

- The container may be restarted, depending on the pod’s restart policy

All of this happens via cgroups.

You can check the pod’s status:

kubectl describe pod myapp | grep -A 5 "State:"

And view its current CPU and memory usage (note that this shows usage, not throttling counters) via:

    kubectl top pod myapp
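The same status check can be scripted. The sketch below assumes you have the pod object as a dictionary, for example parsed from the output of `kubectl get pod myapp -o json`; the function name and the sample data are hypothetical.

```python
def oom_killed_containers(pod):
    """Return names of containers whose current or last state is OOMKilled."""
    names = []
    statuses = (pod.get("status") or {}).get("containerStatuses") or []
    for status in statuses:
        for key in ("state", "lastState"):
            terminated = (status.get(key) or {}).get("terminated") or {}
            if terminated.get("reason") == "OOMKilled":
                names.append(status["name"])
                break  # count each container once
    return names


# Hand-written sample shaped like a pod status; not real cluster output.
sample_pod = {
    "status": {
        "containerStatuses": [
            {
                "name": "myapp",
                "state": {"running": {}},
                "lastState": {"terminated": {"reason": "OOMKilled", "exitCode": 137}},
            },
            {"name": "sidecar", "state": {"running": {}}, "lastState": {}},
        ]
    }
}
print(oom_killed_containers(sample_pod))
```

Checking `lastState` as well as `state` matters because a restarted container is already running again by the time you look, and the OOM kill is only visible in its previous state.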

How Kubernetes Organizes cgroups

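Kubernetes assigns every pod a QoS (Quality of Service) class, and the kubelet groups pod cgroups by that class:

| QoS Class | When It’s Assigned | Enforcement Behavior |
|---|---|---|
| Guaranteed | Every container sets CPU and memory limits, with requests equal to limits | Least likely to be OOM-killed or evicted under node pressure |
| Burstable | At least one container sets a request or limit, but the pod does not meet the Guaranteed criteria | Can burst above requests up to its limits; usage above requests is at risk under pressure |
| BestEffort | No requests or limits defined | No guarantees, lowest priority, first to be evicted |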

The more precise you are with resource definitions, the more cgroup protections you get.
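The classification rules can be sketched in a few lines. This is a simplified illustration, not the kubelet’s implementation: it assumes containers are given as pod-spec style dictionaries, considers only CPU and memory, and compares quantities as raw strings (so "500m" and "0.5" would not be treated as equal).

```python
def qos_class(containers):
    """Classify a pod as Guaranteed, Burstable, or BestEffort."""
    any_set = False
    all_guaranteed = True
    for container in containers:
        resources = container.get("resources") or {}
        requests = resources.get("requests") or {}
        limits = resources.get("limits") or {}
        for name in ("cpu", "memory"):
            request = requests.get(name)
            limit = limits.get(name)
            if request is not None or limit is not None:
                any_set = True
            # Guaranteed needs a limit on every resource; an omitted
            # request defaults to the limit, so only a differing one fails.
            if limit is None or (request is not None and request != limit):
                all_guaranteed = False
    if not any_set:
        return "BestEffort"
    return "Guaranteed" if all_guaranteed else "Burstable"


same = {"cpu": "500m", "memory": "256Mi"}
print(qos_class([{"resources": {"requests": same, "limits": same}}]))
print(qos_class([{"resources": {"requests": {"cpu": "250m"}}}]))
print(qos_class([{"name": "no-resources"}]))
```

Note that a single container without limits is enough to move a multi-container pod out of the Guaranteed class.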

cgroups v1 vs v2 in Kubernetes

Kubernetes support for cgroups v2, a modernized and unified interface, reached general availability in v1.25, and most current Linux distributions now enable cgroups v2 by default.

- cgroups v1: Legacy, with a separate hierarchy for each resource controller

- cgroups v2: Cleaner, more consistent, stricter enforcement

Kubernetes supports both, but cgroups v2 is recommended for:

- Better memory management

- More accurate CPU throttling

- Simpler introspection

Want to check which one your node is using?

stat -fc %T /sys/fs/cgroup

- Returns cgroup2fs for v2

- Returns tmpfs or cgroup for v1
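If you would rather check from a script, one alternative heuristic is to look for the `cgroup.controllers` file, which exists at the root of a cgroups v2 unified hierarchy. The sketch below assumes the conventional mount point `/sys/fs/cgroup`; the function name is hypothetical, and hybrid setups are reported as v1.

```python
import os


def cgroup_version(root="/sys/fs/cgroup"):
    """Guess the cgroup version mounted at root: "v2", "v1", or "unknown"."""
    if os.path.isfile(os.path.join(root, "cgroup.controllers")):
        return "v2"
    # A v1 mount is a directory of per-controller hierarchies
    # (cpu, memory, ...), so any non-empty directory is treated as v1.
    if os.path.isdir(root) and os.listdir(root):
        return "v1"
    return "unknown"
```

Calling `cgroup_version()` on a node (or inside a container that mounts the cgroup filesystem) should agree with the `stat` check above.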

Best Practices for Developers

Always define requests and limits

This gives Kubernetes what it needs to enforce cgroups effectively. Skipping this can result in BestEffort pods, which get evicted first under pressure.

Understand CPU throttling

If you set a CPU limit and your app uses more, the kernel will throttle it—slowing down your container without crashing it. Watch for this in performance-sensitive apps.
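Throttling is recorded in the cgroup’s `cpu.stat` file, which includes `nr_periods` and `nr_throttled` counters. Here is a minimal sketch for turning those counters into a throttling ratio; the function names and the sample text are illustrative, and the location of the file depends on your node and cgroup version (inside a container on a cgroups v2 node it is typically `/sys/fs/cgroup/cpu.stat`).

```python
def parse_cpu_stat(text):
    """Parse "key value" lines from a cpu.stat file into a dict of ints."""
    stats = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[1].isdigit():
            stats[parts[0]] = int(parts[1])
    return stats


def throttled_fraction(stats):
    """Fraction of enforcement periods in which the cgroup was throttled."""
    periods = stats.get("nr_periods", 0)
    if periods == 0:
        return 0.0
    return stats.get("nr_throttled", 0) / periods


# Made-up sample in the cgroups v2 cpu.stat layout, not real measurements.
sample = """usage_usec 9000000
nr_periods 200
nr_throttled 50
throttled_usec 1250000
"""
print(throttled_fraction(parse_cpu_stat(sample)))
```

The counters are cumulative, so in practice you would sample the file twice and compare the difference to see whether throttling is happening right now.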

Use monitoring tools

Tools like:

- kubectl top

- OpenCost

- Prometheus + cAdvisor

- Kube-state-metrics

…can reveal when containers are hitting cgroup limits.

Don’t mix workloads with different QoS on the same node

Under node pressure, BestEffort pods are evicted before Guaranteed ones. Keep production workloads isolated.

Troubleshooting cgroup-related Issues

Symptom: Pod gets OOMKilled

→ Cause: Memory limit was hit and enforced by cgroup

→ Fix: Raise memory limit or optimize your app’s usage

Symptom: Pod runs slowly under load

→ Cause: CPU limit triggered throttling via cgroup

→ Fix: Raise CPU limit or remove it to allow bursting

Symptom: Node pressure and random evictions

→ Cause: Too many BestEffort pods, no resource guarantees

→ Fix: Add requests to critical pods to move them to Burstable or Guaranteed QoS

Summary

cgroups are the core mechanism Kubernetes uses to turn resource requests and limits into actual, enforced system behavior.

They ensure that your containers stay in their lane—protecting the performance and stability of the entire cluster. You don’t need to manage cgroups directly, but understanding them gives you superpowers for debugging and fine-tuning your workloads.