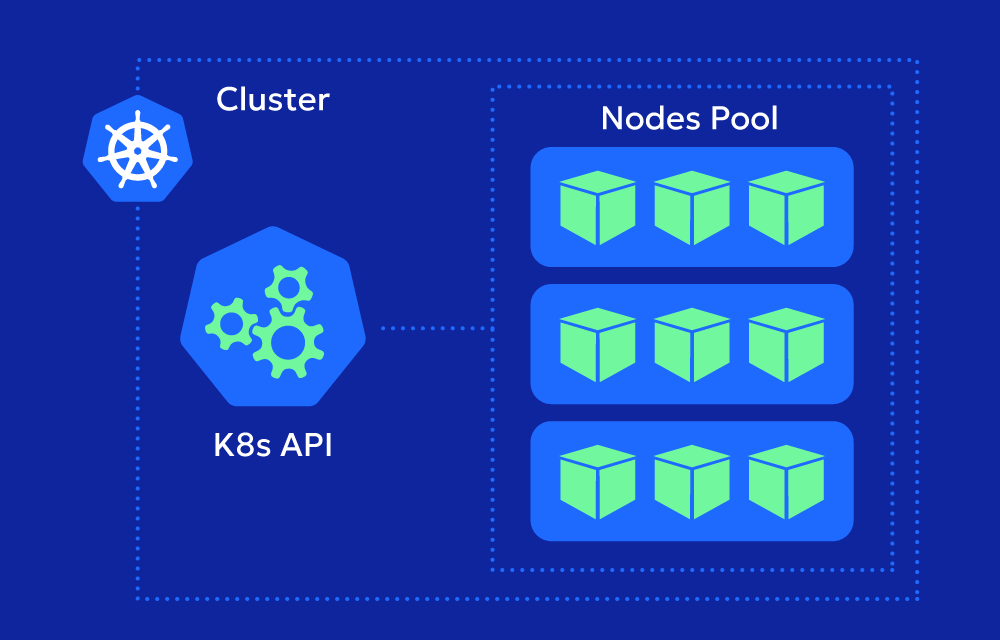

The Kubernetes Horizontal Pod Autoscaler (HPA) automatically adjusts the number of pods in a workload according to demand, based on observed resource usage, such as CPU, memory, or custom metrics.

While it is a powerful mechanism, the typical configuration observed in the wild often reacts too slowly to steep load spikes.

This lag can lead to degraded performance or even downtime.

Our goal is to share practical, battle-tested strategies to make HPA react faster without introducing instability or excessive costs.

The focus is on actionable configurations you can implement right away.

Understanding the HPA Evaluation Delay

The 15-second HPA sync period

By default, the HPA controller checks metrics and decides whether to scale every 15 seconds. This interval is not configurable per HPA; it is set globally by the --horizontal-pod-autoscaler-sync-period flag of kube-controller-manager. If you are using a managed Kubernetes distribution such as EKS or AKS, you have no control over this interval.

This adds 0-15s (7.5s on average) to your scaling delay, on top of the time it takes to create nodes, pull images, and so on. Some HPA settings, such as the scaleUp behavior, can make it even worse.

Dealing with Default ScaleUp Behavior

How default scaling works

Without tuning, the HPA can at most double the number of pods (or add 4 pods, whichever is larger) every 15 seconds.

The default scaleUp behavior is documented in the Kubernetes Horizontal Pod Autoscaling documentation.

If you have 10 pods and need 80, the HPA adds a significant delay.

Initial scaling of 10->20 happens at the next evaluation cycle, on average after 7.5s.

The 20->40 scaling will happen 15s later, and 40->80 another 15s later.

On average the HPA will add 37.5s to the delay.
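The arithmetic above can be reproduced with a small, simplified Python model. It assumes the default scaleUp policies (+100% or +4 pods per 15s, selectPolicy Max), that the metric already asks for the final replica count, that each allowed step lands on a consecutive sync tick, and that the first tick is on average half a sync period away. Pod startup and node provisioning are deliberately ignored, and the function names are illustrative only.

```python
from __future__ import annotations

SYNC_PERIOD_S = 15.0  # default --horizontal-pod-autoscaler-sync-period


def default_scale_up_limit(replicas: int) -> int:
    """Highest replica count the default scaleUp policies allow in one period:
    +100% or +4 pods, whichever is larger (selectPolicy: Max)."""
    return max(replicas * 2, replicas + 4)


def time_to_reach(start: int, target: int) -> tuple[int, float]:
    """Return (scaling steps, average seconds) to go from start to target.

    Assumes each allowed step happens on a consecutive sync tick and the
    first tick is, on average, half a sync period away.
    """
    replicas, steps = start, 0
    while replicas < target:
        replicas = min(target, default_scale_up_limit(replicas))
        steps += 1
    if steps == 0:
        return 0, 0.0
    return steps, SYNC_PERIOD_S / 2 + (steps - 1) * SYNC_PERIOD_S


print(time_to_reach(10, 80))  # (3, 37.5)
```

The three steps (10->20->40->80) cost 7.5s + 15s + 15s in this model, which is where the 37.5s figure comes from.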

To reduce this delay, override the scaleUp behavior of the HPA to allow more aggressive scaling.

Default behavior often results in service degradation during large, steep spikes because capacity grows too slowly.

Controlling scale velocity with spec.behavior.scaleUp

You can explicitly set scaleUp rules to control how aggressively HPA adds pods.

Example 1: Percentage-based scaling

```yaml
behavior:
  scaleUp:
    policies:
    - type: Percent
      value: 300
      periodSeconds: 15
    selectPolicy: Max
```

This configuration allows the replica count to quadruple (a 300% increase) every 15 seconds.

Example 2: Absolute pod count increase

```yaml
behavior:
  scaleUp:
    policies:
    - type: Pods
      value: 10
      periodSeconds: 60
    selectPolicy: Max
```

This configuration allows adding up to 10 pods per minute.
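To see how the two policy types translate into a replica ceiling, here is a minimal Python sketch. It is a simplification: the real controller measures each policy from the replica count at the start of that policy's own period, whereas this sketch evaluates every policy from one shared starting count. The Policy class and function names are hypothetical, not part of any Kubernetes client library.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class Policy:
    type: str  # "Percent" or "Pods"
    value: int
    period_seconds: int  # kept for reference; this sketch evaluates a single period


def policy_limit(start_replicas: int, policy: Policy) -> int:
    """Highest replica count a single policy allows, measured from the
    replica count at the start of the period."""
    if policy.type == "Percent":
        return math.ceil(start_replicas * (1 + policy.value / 100))
    if policy.type == "Pods":
        return start_replicas + policy.value
    raise ValueError(f"unknown policy type: {policy.type!r}")


def scale_up_limit(start_replicas: int, policies: list[Policy],
                   select_policy: str = "Max") -> int:
    """Combine policies the way selectPolicy does: Max picks the largest
    allowed change, Min the smallest, Disabled blocks scale-up."""
    if select_policy == "Disabled":
        return start_replicas
    limits = [policy_limit(start_replicas, p) for p in policies]
    return max(limits) if select_policy == "Max" else min(limits)


percent = Policy("Percent", 300, 15)  # Example 1
pods = Policy("Pods", 10, 60)         # Example 2
print(scale_up_limit(10, [percent]))  # 40: quadrupled
print(scale_up_limit(10, [pods]))     # 20: +10 pods
```

Note that for large workloads a fixed Pods policy is slower than a Percent policy: at 2 replicas the two example policies allow 8 and 12 pods respectively, but at 10 replicas they allow 40 and 20.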

Key settings:

- selectPolicy: Max chooses the policy that allows the largest change; Min chooses the one that allows the smallest change. Disabled turns off scaling in that direction.

- stabilizationWindowSeconds: how far back the HPA looks at past recommendations to prevent oscillations. Lower values mean faster reaction. For scaleUp, set it to 0 (which is also the default).
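Why a non-zero scale-up window slows reaction can be sketched in a few lines of Python. The sketch assumes the documented behavior that, for scale-up, the HPA acts on the lowest recommendation inside the window, so a spike must persist for the whole window before it takes effect; the scale-down side is not modeled and the function name is hypothetical.

```python
from __future__ import annotations


def stabilized_scale_up(current_replicas: int, desired_now: int,
                        past: list[tuple[float, int]], now: float,
                        window_s: float) -> int:
    """Scale-up side of the stabilization window (simplified).

    past holds (timestamp_s, recommended_replicas) from earlier syncs. The
    lowest recommendation inside the window wins, so a spike is only acted
    on once it has lasted for the whole window.
    """
    up = desired_now
    for ts, rec in past:
        if ts > now - window_s:  # only recommendations newer than the cutoff
            up = min(up, rec)
    # Only scale up; the scale-down side of stabilization is not modeled.
    return max(current_replicas, up)


history = [(0.0, 10), (15.0, 10)]  # two quiet syncs, then a spike at t=30s
print(stabilized_scale_up(10, 40, history, now=30.0, window_s=0))   # 40
print(stabilized_scale_up(10, 40, history, now=30.0, window_s=60))  # 10
```

With a 0s window the spike is acted on immediately; with a 60s window the two earlier quiet recommendations hold the workload at 10 replicas.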

Best practice:

- Use aggressive settings for workloads with unpredictable, bursty traffic where uptime is critical.

- Use conservative settings for predictable, stable workloads to control costs.

Predictive Scaling and the “Rate of Change to Overscale”

You should think of your application as running in two modes:

- Peaceful mode: Demand is relatively stable; capacity changes gradually.

- Emergency mode: Rare, steep demand growth where you must scale far ahead of current usage to avoid outages.

Overprovisioning for a short time in emergency mode is far less costly than downtime.

The rate of change approach

The vanilla approach to resource-based scaling is to look at current resource usage and pick the replica count at which, under the current load, resource utilization would equal the target utilization.
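The vanilla calculation follows the formula in the Kubernetes HPA documentation, desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). Below is a minimal Python sketch of it, assuming the default tolerance of 0.1 (the --horizontal-pod-autoscaler-tolerance flag) and ignoring the controller's special handling of missing metrics and not-yet-ready pods. The function name is illustrative.

```python
import math


def desired_replicas(current_replicas: int, current_utilization: float,
                     target_utilization: float, tolerance: float = 0.1) -> int:
    """desiredReplicas = ceil(currentReplicas * current / target).

    Within the tolerance band the HPA leaves the replica count unchanged.
    Missing metrics and not-yet-ready pods are ignored in this sketch.
    """
    ratio = current_utilization / target_utilization
    if abs(1.0 - ratio) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


print(desired_replicas(10, 90, 60))  # 15
print(desired_replicas(10, 63, 60))  # 10 (inside the tolerance band)
```

The formula only looks at the current value, which is exactly the limitation discussed next.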

The problem with this approach is the following:

During a spike the usage is growing and is likely to continue to grow.

The data indicates that the near-future load will be higher, yet instead of using this data to create pods in advance, you wait until the last moment to create them.

Ideally, you would take the rate of change into account.

You could extrapolate the resource usage into the near future and immediately choose the replica count according to the expected usage in the near future.

This can be achieved in KEDA by using the Prometheus scaler with a PromQL query that starts from the raw resource metric and combines current usage with predicted near-future usage, so that it serves both peaceful and emergency mode.

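For example, a query along these lines can be used. It assumes a workload label has been attached to the cAdvisor series (for example through relabeling), since cAdvisor does not expose one. The 1m rate window and 5m lookback are illustrative; rate() needs at least two samples inside its window, so a 15s window is usually too short at common scrape intervals.

```promql
avg by (workload, container) (
  max by (workload, pod, container) (
    label_replace(
      predict_linear(rate(container_cpu_usage_seconds_total[1m])[5m:15s], 60),
      "source", "predicted", "", ""
    )
    or
    label_replace(
      rate(container_cpu_usage_seconds_total[1m]),
      "source", "current", "", ""
    )
  )
)
```

The label_replace calls only tag the two series sets so that the or operator keeps both of them.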

The query compares the current CPU usage of each container to its predicted usage 60 seconds later, takes the higher value, and then averages those values across all pods in the workload.
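The same logic can be sketched outside Prometheus to make the arithmetic concrete. This is a minimal Python sketch, assuming per-pod CPU samples are already available as (timestamp_s, cores) pairs. The predict_linear function here is a hand-written least-squares fit that approximates Prometheus's predict_linear (which extrapolates from the evaluation time; this sketch extrapolates from the newest sample). All names and sample data are hypothetical.

```python
from __future__ import annotations


def predict_linear(samples: list[tuple[float, float]], seconds_ahead: float) -> float:
    """Least-squares line through (timestamp_s, value) samples, evaluated
    seconds_ahead after the newest sample (treated as 'now')."""
    n = len(samples)
    mean_t = sum(t for t, _ in samples) / n
    mean_v = sum(v for _, v in samples) / n
    cov = sum((t - mean_t) * (v - mean_v) for t, v in samples)
    var = sum((t - mean_t) ** 2 for t, _ in samples)
    slope = cov / var  # needs at least two distinct timestamps
    intercept = mean_v - slope * mean_t
    return intercept + slope * (samples[-1][0] + seconds_ahead)


def scaling_signal(per_pod: dict[str, list[tuple[float, float]]],
                   seconds_ahead: float = 60.0) -> float:
    """For each pod take max(current, predicted), then average across pods."""
    values = []
    for samples in per_pod.values():
        current = samples[-1][1]
        values.append(max(current, predict_linear(samples, seconds_ahead)))
    return sum(values) / len(values)


cpu = {
    "pod-a": [(0, 0.2), (15, 0.3), (30, 0.4), (45, 0.5), (60, 0.6)],  # ramping up
    "pod-b": [(0, 0.5), (15, 0.5), (30, 0.5), (45, 0.5), (60, 0.5)],  # flat
}
print(round(scaling_signal(cpu), 3))  # 0.75
```

Both pods currently use 0.55 cores on average, but the ramping pod is projected to reach about 1.0 core, so the signal the scaler sees is 0.75. Taking the max means a pod whose usage is falling still reports its current value rather than a lower prediction.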

Building Headroom into Scaling Decisions

Lower targetUtilization in HPA

Reducing metrics.containerResource.target.averageUtilization ensures that the workload constantly maintains a percentage-based buffer of resources on top of the resources needed for the current demand.

For example:

An HPA configured with an averageUtilization of 80% ensures that if the aggregate demand on the workload is 10 vCPU, the requested CPU will be 12.5 vCPU (12.5 * 0.8 = 10), i.e. you always have 25% more resources than you use.

Reducing averageUtilization to 50% raises the requested CPU to 20 vCPU, ensuring you always have twice the resources you currently use.

An additional benefit of setting low averageUtilization is the “overscaling” of the application during a spike.

If you set averageUtilization to 50% and the load on the application increases by 5 vCPU, the workload gets 10 additional vCPU, which helps it absorb the further increase in load as the spike continues.

Essentially, averageUtilization serves as percentage-based overprovisioning and overscaling.
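The buffer and overscaling effect can be checked with a short Python sketch. It assumes a hypothetical workload where each pod requests 1 vCPU, and computes the steady-state replica count the HPA converges to, ceil(demand / (request per pod * target)). Rounding to whole pods is why 12.5 vCPU becomes 13 pods.

```python
import math


def replicas_for(demand_vcpu: float, request_per_pod_vcpu: float,
                 target_utilization: float) -> int:
    """Replicas needed so that average utilization of the requests stays at
    or below the target."""
    return math.ceil(demand_vcpu / (request_per_pod_vcpu * target_utilization))


# Hypothetical workload: each pod requests 1 vCPU.
for target in (0.8, 0.5):
    before = replicas_for(10, 1.0, target)  # 10 vCPU of demand
    after = replicas_for(15, 1.0, target)   # demand grows by 5 vCPU
    print(f"target={target:.0%}: {before} -> {after} pods")
```

At a 50% target, the 5 vCPU increase adds 10 pods (10 vCPU of requests), while at 80% it adds only 6.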

Minimum replicas (minReplicas)

If, instead of a percentage-based buffer, you want to maintain a constant baseline (for example, for an extremely variable and spiky workload), you can use the minReplicas field of the HPA.

This can be very costly but ensures availability until you pass the baseline, regardless of the scaling speed of your application and infrastructure.
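The effect of a baseline can be illustrated with a minimal Python sketch, assuming the HPA's recommendation is clamped to the [minReplicas, maxReplicas] range. The function name and the workload numbers (1 vCPU request per pod, 50% target, minReplicas 30, maxReplicas 100) are hypothetical.

```python
import math


def hpa_replicas(demand_vcpu: float, request_per_pod_vcpu: float,
                 target_utilization: float, min_replicas: int,
                 max_replicas: int) -> int:
    """Steady-state replica count, clamped to [minReplicas, maxReplicas]."""
    recommended = math.ceil(demand_vcpu / (request_per_pod_vcpu * target_utilization))
    return max(min_replicas, min(recommended, max_replicas))


# Hypothetical: 1 vCPU request per pod, 50% target, minReplicas=30, maxReplicas=100.
for demand in (10, 20, 100):
    print(demand, hpa_replicas(demand, 1.0, 0.5, 30, 100))
```

At 10 vCPU of demand the HPA would recommend 20 pods but the baseline keeps 30 running; only once demand pushes the recommendation above 30 does scaling speed matter again.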

Optimizing Pod Readiness for Faster Scale-Up

Even after a pod has been created and the application has finished initializing, the pod is useless until it is marked Ready, because traffic will not be routed to it (unless it receives work from a message queue or by polling).

Startup probe configuration

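Ensure startup probes let pods become Ready as soon as possible. While a startup probe is configured, liveness and readiness checks are disabled until it succeeds, so a slow startup probe directly delays readiness.

Don’t confuse startup probes with readiness probes. Configure a short probe interval for faster readiness detection.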

Probe interval

The probe interval is configured with the periodSeconds field of the startup probe.

For example:

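```yaml
startupProbe:
  httpGet:
    path: /health
    port: 8080
  periodSeconds: 2
  # The container is restarted after failureThreshold consecutive failures, so the
  # startup budget is roughly failureThreshold * periodSeconds (60s here).
  # The default failureThreshold of 3 would allow only ~6s with a 2s period.
  failureThreshold: 30
```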

If you set a long interval, for example 10 seconds, and your application finishes bootstrapping just after a probe run, you may wait up to 10 seconds before it starts handling traffic and offloading work from the other pods in the workload.
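The trade-off between probe interval, detection delay, and startup budget can be sketched in Python. This is an idealized model, assuming probes fire exactly at initialDelaySeconds + k * periodSeconds and ignoring probe timeouts and the readiness probe that runs after startup succeeds. The function names are hypothetical.

```python
import math


def first_successful_probe(ready_at_s: float, period_s: float,
                           initial_delay_s: float = 0.0) -> float:
    """Time of the first startup probe that runs at or after the app is ready,
    assuming probes fire exactly at initial_delay + k * period."""
    if ready_at_s <= initial_delay_s:
        return initial_delay_s
    k = math.ceil((ready_at_s - initial_delay_s) / period_s)
    return initial_delay_s + k * period_s


def startup_budget(period_s: float, failure_threshold: int = 3,
                   initial_delay_s: float = 0.0) -> float:
    """Roughly how long the kubelet waits before restarting a container whose
    startup probe keeps failing (probe timeouts ignored)."""
    return initial_delay_s + failure_threshold * period_s


# App finishes bootstrapping at t=10.1s.
print(first_successful_probe(10.1, 10))  # 20.0 -> 9.9s wasted
print(first_successful_probe(10.1, 2))   # 12.0 -> 1.9s wasted
print(startup_budget(2))                 # 6.0 with the default failureThreshold
```

Shortening periodSeconds cuts the wasted time, but it also shrinks the startup budget unless failureThreshold is raised accordingly.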

Balancing Cost and Responsiveness

You can use all of the techniques mentioned above to improve the scaling of your workload.

Most of these techniques have a side effect of increasing costs.

You should combine these techniques based on the characteristics of your load and infrastructure, as well as the SLA of the application, and find the sweet spot between cost and responsiveness.

These factors change constantly, so you should continually iterate and base your decisions on historical patterns and stress tests that simulate expected worst-case scenarios.