Resizing pods in Kubernetes has always been tricky. Adjusting CPU or memory meant restarting pods, triggering rollouts, and hoping the cluster had enough capacity for the new values, usually with little visibility into whether it did. That’s far from ideal, especially for stateful workloads, where a restart often means downtime unless the service is clustered correctly.

In-Place Pod Resize changes that, letting you adjust a pod’s CPU and memory requests and limits while it’s still running, without a restart.

In this guide, we’ll go over how it works, when to use it, and how to apply it safely without running into the common pitfalls.

What In-Place Pod Resize does and when to use it

In-Place Pod Resize is a Kubernetes feature that lets you modify a running pod’s CPU and memory requests and limits without evicting or recreating it. If you’re running anything long-lived, sensitive to restarts, or just tired of bloated CPU requests, this feature is gold.

Use In-Place Pod Resize when:

- You’re optimizing workloads based on real-time metrics (e.g., via Prometheus or VPA)

- You want to avoid pod churn

- You’re running batch jobs or stream processors with variable workloads

- You’re doing manual rightsizing but don’t want to restart

- You need to temporarily increase resources for a pod handling a short-term spike (like an ingestion job or a one-time report)

- You’re fine-tuning resource usage for performance testing without redeploying multiple times

- You want to lower overprovisioned requests across the cluster without risking downtime

- You’re running stateful services (like Kafka, Redis, or PostgreSQL) that should not be restarted just to change CPU/memory

- You’re experimenting with different resource profiles to find the sweet spot for production traffic

- You’re supporting applications with unpredictable workloads and want to adapt quickly without triggering rollout logic

- You’re running workloads in edge or limited-resource environments and need precise control over allocation

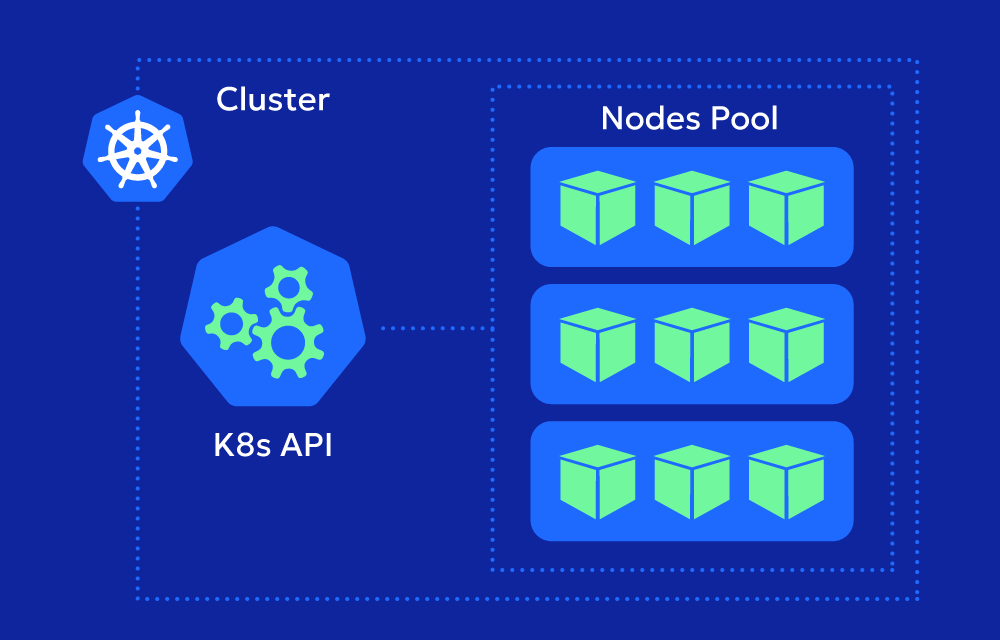

How it works: The short version

Each container is assigned:

- requests: Minimum guaranteed resources

- limits: Maximum allowed usage

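With In-Place Pod Resize:

- ✅ You can update CPU and memory `requests` and `limits` on a live pod

- ✅ Whether a container restarts is set per resource by its `resizePolicy`: `NotRequired` (the default) applies the change without a restart, while `RestartContainer` restarts the container. In v1.33, decreasing a memory limit is only allowed when memory uses `RestartContainer`.

- ✅ The pod keeps running without recreation

- 🚫 A resize can’t change the pod’s QoS class. For example, lowering requests below limits on a Guaranteed pod is rejected.

- 🚫 You can only perform in-place resizing on individual pod objects. Changes made through higher-level resources (like Deployments or StatefulSets) will trigger a full pod replacement.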
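To make the restart rule concrete, here is a minimal sketch that reads one container spec, shaped like an entry of `pod.spec.containers` from `kubectl get pod -o json`, and reports whether resizing a given resource would restart that container. The function name `restart_required` is hypothetical, and it only reflects what the `resizePolicy` field asks for; the kubelet can still defer or reject the resize itself.

```python
def restart_required(container: dict, resource: str) -> bool:
    """Return True if resizing `resource` ("cpu" or "memory") restarts this container.

    `container` is one entry of pod.spec.containers as a plain dict. A resource
    with no resizePolicy entry falls back to the default, NotRequired.
    """
    for policy in container.get("resizePolicy", []):
        if policy.get("resourceName") == resource:
            return policy.get("restartPolicy") == "RestartContainer"
    return False  # default: resize without restarting the container


# Hypothetical container spec: CPU resizes live, memory changes restart the container.
example = {
    "name": "my-app",
    "resizePolicy": [
        {"resourceName": "cpu", "restartPolicy": "NotRequired"},
        {"resourceName": "memory", "restartPolicy": "RestartContainer"},
    ],
}

if __name__ == "__main__":
    print(restart_required(example, "cpu"))     # False
    print(restart_required(example, "memory"))  # True
```

Defaulting to `False` mirrors the API default: a container with no `resizePolicy` at all is resized without a restart.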

What you’ll need (prerequisites)

To use In-Place Pod Resize, make sure:

- You’re running Kubernetes v1.33+, where the feature is in beta

- The InPlacePodVerticalScaling feature gate is enabled (it’s on by default starting in v1.33)

- Your kubectl client is v1.32 or newer, so it supports `kubectl patch --subresource resize`

- You patch the pod directly, not its parent Deployment
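If you automate resizes, it’s worth having the script refuse to run against an older cluster. A minimal sketch, assuming you pass it the `serverVersion.gitVersion` string from `kubectl version -o json` (for example `v1.33.1`, or a provider-suffixed string like the hypothetical `v1.33.1-gke.1000`). The function name is made up, and it only checks the version number: it can’t tell whether the InPlacePodVerticalScaling feature gate was turned off.

```python
import re

# Minimum server version where In-Place Pod Resize is beta (on by default).
MIN_VERSION = (1, 33)


def supports_inplace_resize(git_version: str) -> bool:
    """Return True if a Kubernetes server gitVersion is v1.33 or newer.

    Accepts strings such as "v1.33.0" or "v1.33.1-gke.1000". Only the
    major/minor numbers are compared; feature-gate settings are not visible here.
    """
    match = re.match(r"^v?(\d+)\.(\d+)", git_version.strip())
    if match is None:
        raise ValueError(f"unrecognized version string: {git_version!r}")
    major, minor = int(match.group(1)), int(match.group(2))
    return (major, minor) >= MIN_VERSION


if __name__ == "__main__":
    for v in ["v1.32.4", "v1.33.0", "v1.33.1-gke.1000"]:
        print(v, supports_inplace_resize(v))  # False, True, True
```

Comparing `(major, minor)` as integer tuples avoids the classic string-comparison bug where `"1.9"` sorts after `"1.33"`.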

Step-by-step: How to resize a pod in place

Step 1: Find the Pod

```
kubectl get pods -n your-namespace
```
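Once you have the pod name, send the new values to the pod’s `resize` subresource. In v1.33, changes to container resources go through this subresource; a regular patch of the pod spec is rejected. From a shell that looks like `kubectl patch pod my-app-pod -n your-namespace --subresource resize --patch '{"spec":{"containers":[{"name":"my-app","resources":{"requests":{"cpu":"750m","memory":"512Mi"}}}]}}'`, where `my-app-pod` and `my-app` stand in for your pod and container names. The sketch below builds the same command in Python so automation can reuse it; the helper name `build_resize_command` is hypothetical.

```python
import json
import shlex


def build_resize_command(pod, namespace, container, cpu, memory):
    """Build a kubectl argv that resizes one container of a running pod in place.

    The patch targets the pod's `resize` subresource. No --type is given, so
    kubectl uses its default strategic merge patch, which matches the entry in
    the `containers` list by `name` and leaves everything else untouched.
    """
    patch = {
        "spec": {
            "containers": [
                {
                    "name": container,
                    "resources": {"requests": {"cpu": cpu, "memory": memory}},
                }
            ]
        }
    }
    return [
        "kubectl", "patch", "pod", pod,
        "-n", namespace,
        "--subresource", "resize",
        "--patch", json.dumps(patch),
    ]


if __name__ == "__main__":
    cmd = build_resize_command("my-app-pod", "your-namespace", "my-app", "750m", "512Mi")
    # Print a copy-pasteable shell command; pass `cmd` to subprocess.run() to execute it.
    print(shlex.join(cmd))
```

Leaving out `--type` is deliberate: a JSON merge patch (`--type=merge`) replaces lists wholesale, so it would try to swap the pod’s entire `containers` list for one entry that only has a name and resources.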

Step 2: Verify the Change

Don’t assume the resize worked just because the command returned without an error. Check the resources the kubelet actually allocated to the pod.

```
kubectl get pod my-app-pod -o yaml | grep allocatedResources -A 5
```

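Look for the new values under `allocatedResources` in each entry of `status.containerStatuses`:

```yaml
status:
  containerStatuses:
  - allocatedResources:
      cpu: 750m
      memory: 512Mi
```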
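To run the same check from a script instead of grepping YAML, you can compare each container’s requested values in the spec with what the kubelet reports in `allocatedResources`. A minimal sketch, assuming `pod` is the parsed output of `kubectl get pod my-app-pod -o json`; the function name, return shape, and example pod are made up for illustration.

```python
def resize_status(pod: dict) -> dict:
    """Compare desired requests (spec) with allocated resources (status) per container.

    `pod` is the parsed JSON of `kubectl get pod <name> -o json`. Returns
    {container_name: bool}; True means every requested value has been allocated.
    """
    allocated = {
        cs["name"]: cs.get("allocatedResources", {})
        for cs in pod.get("status", {}).get("containerStatuses", [])
    }
    result = {}
    for container in pod["spec"]["containers"]:
        desired = container.get("resources", {}).get("requests", {})
        got = allocated.get(container["name"], {})
        # Plain string comparison: assumes the API server returns both sides
        # in the same canonical quantity form (e.g. "750m", "512Mi").
        result[container["name"]] = all(got.get(k) == v for k, v in desired.items())
    return result


# Hypothetical pod: memory has been allocated, CPU is still at the old value.
example_pod = {
    "spec": {"containers": [
        {"name": "my-app", "resources": {"requests": {"cpu": "750m", "memory": "512Mi"}}},
    ]},
    "status": {"containerStatuses": [
        {"name": "my-app", "allocatedResources": {"cpu": "500m", "memory": "512Mi"}},
    ]},
}

if __name__ == "__main__":
    print(resize_status(example_pod))  # {'my-app': False}
```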

⚠️ Pitfalls to Watch Out For

Here’s where most people get tripped up:

1. Updating the deployment? Nope.

If you change resources in a Deployment’s or StatefulSet’s pod template, Kubernetes rolls out new pods to replace the old ones. To resize in place, you have to patch the pod directly.

2. No error doesn’t mean success

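If the node doesn’t have room for the new requests, the API server still accepts the patch, but the kubelet won’t apply it. Instead, it marks the pod with a `PodResizePending` condition (reason `Deferred` if the resize may fit later, `Infeasible` if it can’t fit on that node) and leaves `status.containerStatuses[].allocatedResources` at the old values. Check the status, not just the spec.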
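In v1.33, the kubelet reports resize progress through two pod conditions: `PodResizePending` (with reason `Deferred` or `Infeasible`) when it can’t grant the resize yet, and `PodResizeInProgress` while an accepted resize is still being applied. A minimal sketch that pulls those out of `kubectl get pod <name> -o json` output; the function name and the example pod are hypothetical.

```python
RESIZE_CONDITIONS = {"PodResizePending", "PodResizeInProgress"}


def resize_conditions(pod: dict) -> list:
    """Return (type, reason, message) for each active resize-related pod condition.

    An empty list means no resize is pending or in progress.
    """
    return [
        (c["type"], c.get("reason", ""), c.get("message", ""))
        for c in pod.get("status", {}).get("conditions", [])
        if c.get("type") in RESIZE_CONDITIONS and c.get("status") == "True"
    ]


# Hypothetical pod whose resize could not fit on its node.
example_pod = {"status": {"conditions": [
    {"type": "Ready", "status": "True"},
    {"type": "PodResizePending", "status": "True", "reason": "Infeasible"},
]}}

if __name__ == "__main__":
    print(resize_conditions(example_pod))  # [('PodResizePending', 'Infeasible', '')]
```

In a real cluster, the condition’s `message` field carries the kubelet’s explanation, which is usually the quickest way to see which resource didn’t fit.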

3. Controllers may override your change

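If your pod is managed by a controller (ReplicaSet, StatefulSet, etc.), the resize only changes that one pod. The controller’s pod template still holds the old values, so any replacement pod (from a rollout, an eviction, or a scale-up) comes back with the original resources.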
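A quick way to spot pods whose resize won’t survive a replacement is to look for a controlling owner reference in the pod’s metadata. A minimal sketch over `kubectl get pod <name> -o json` output; the function name and example pod are hypothetical.

```python
def controller_owner(pod: dict):
    """Return (kind, name) of the controller that owns this pod, or None for a bare pod.

    A pod with a controller is recreated from the controller's template, so an
    in-place resize on it lasts only until that pod is replaced.
    """
    for ref in pod.get("metadata", {}).get("ownerReferences", []):
        if ref.get("controller"):
            return ref["kind"], ref["name"]
    return None


# Hypothetical Deployment-managed pod (its direct owner is a ReplicaSet).
example_pod = {"metadata": {
    "name": "my-app-7d9f8-abcde",
    "ownerReferences": [
        {"apiVersion": "apps/v1", "kind": "ReplicaSet", "name": "my-app-7d9f8", "controller": True},
    ],
}}

if __name__ == "__main__":
    owner = controller_owner(example_pod)
    if owner:
        print(f"Resize is temporary: pod is managed by {owner[0]}/{owner[1]}")
```

For Deployment-managed pods this returns the ReplicaSet; the Deployment is one more `ownerReferences` hop up, on the ReplicaSet itself.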

How to use this at scale

Manually resizing pods is fine for one-off experiments. But in the real world, you’ll want automation.

Here’s how to scale it up:

- Use VPA (Vertical Pod Autoscaler) in recommendation-only mode to identify ideal resource configs.

- Pull Prometheus metrics to detect under/overprovisioned pods.

- Add a safety check to make sure requests don’t exceed node capacity.

- Write a script that patches pods based on CPU/memory usage deltas. The general strategy looks like this:

  - Loop through running pods

  - Check their current CPU and memory requests

  - Compare those values to your thresholds or external signals

  - Patch the ones that need adjustment

You can build this logic into a script, run it as a cron job, or wrap it in a controller. If you want a working example, scroll to the bottom of the article for a complete Python script you can adapt.
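The loop above boils down to a decision rule. Here’s a minimal sketch of one, assuming you already have per-container CPU usage in millicores (for example a p95 pulled from Prometheus). The headroom, tolerance, floor, and cap values are hypothetical defaults, `max_request_m` stands in for the node-capacity safety check, and the sample pod names are made up. It only covers CPU, since shrinking memory below actual use risks OOM kills. The result is a plan you would then apply with one resize patch per container.

```python
from dataclasses import dataclass


@dataclass
class ContainerSample:
    pod: str
    container: str
    request_m: int  # current CPU request, millicores
    usage_m: int    # observed CPU usage, millicores (e.g. p95 over a window)


def plan_cpu_resizes(samples, headroom_pct=120, tolerance_pct=10,
                     floor_m=10, max_request_m=4000):
    """Return {(pod, container): new_cpu_request_m} for containers worth resizing.

    Target = usage plus headroom, clamped to [floor_m, max_request_m].
    Containers already within tolerance_pct of the target are skipped so
    small fluctuations don't cause constant patching.
    """
    plan = {}
    for s in samples:
        target = s.usage_m * headroom_pct // 100  # integer math, no float rounding
        target = max(floor_m, min(target, max_request_m))
        if abs(target - s.request_m) * 100 > tolerance_pct * s.request_m:
            plan[(s.pod, s.container)] = target
    return plan


samples = [
    ContainerSample("api-0", "api", request_m=1000, usage_m=300),     # overprovisioned
    ContainerSample("api-1", "api", request_m=400, usage_m=340),      # close enough
    ContainerSample("etl-0", "worker", request_m=500, usage_m=5000),  # capped at max
]

if __name__ == "__main__":
    print(plan_cpu_resizes(samples))
    # {('api-0', 'api'): 360, ('etl-0', 'worker'): 4000}
```

The tolerance band matters more than it looks: without it, a script running on a cron would patch nearly every pod on every run as usage drifts by a few millicores.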

Can you use In-Place Pod Resize in production?

Yes, but with a few caveats.

Use it when:

- You need live tuning without disruption

- Your pods are long-lived with stable identities (i.e., not ephemeral jobs)

- You can tolerate manual patching or have automation in place

Avoid if:

- Your workload is short-lived or stateless (just recreate pods)

- You can’t control when controllers replace your pods (replacements come back with the template’s resources)

- You’re not monitoring allocated vs requested resource drift

At the end of the day, it’s worth the hassle

In-Place Pod Resize gives you a practical way to adjust resources without restarting your pods or rolling out changes across the cluster. It’s one of those features that, once you start using it, feels like something Kubernetes should have had all along.

It won’t solve everything. You’ll still need to be thoughtful about when and how to use it. But if you’re running stateful workloads, fine-tuning performance, or just trying to clean up overprovisioned environments, it can make a real difference.

And if you’re looking to streamline the process even more, there are tools that can automate resizing for you, so you can focus less on manual tweaks and more on shipping code.

Full example script (for those who want to build it)

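This script demonstrates how to automate in-place resizing of pods based on simple thresholds: any CPU or memory request above a fixed value is lowered to that value. It uses the Kubernetes Python client to list pods and `kubectl patch`, sent to each pod’s `resize` subresource, to apply the changes.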

⚠️ Disclaimer: This is a simplified example. For real-world use, integrate with Prometheus, the Kubernetes Metrics Server, or VPA. Add logging, error handling, and a dry-run option before applying changes in production.

Requirements:

- Python 3

- Kubernetes Python client: `pip install kubernetes`

- kubectl v1.32 or newer (for `--subresource resize`)

Script: inplace_resizer.py

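```python
import json
import subprocess

from kubernetes import client, config
from kubernetes.utils import parse_quantity  # turns "500m", "1Gi", ... into a Decimal

# Load kubeconfig (from ~/.kube/config)
config.load_kube_config()

# Initialize API client
v1 = client.CoreV1Api()

# Thresholds (example values): requests above these are lowered to them
CPU_THRESHOLD_MILLICORES = 500  # 0.5 vCPU
MEM_THRESHOLD_MI = 512  # 512Mi

NAMESPACE = "default"

pods = v1.list_namespaced_pod(namespace=NAMESPACE)

for pod in pods.items:
    if pod.status.phase != "Running":
        continue

    pod_name = pod.metadata.name

    for container in pod.spec.containers:
        cname = container.name
        # requests is None when the container doesn't set any
        requests = (container.resources.requests if container.resources else None) or {}

        # parse_quantity handles every unit ("0.5", "500m", "1Gi", "512Mi", ...)
        cpu_m = int(parse_quantity(requests.get("cpu", "0")) * 1000)
        mem_mi = int(parse_quantity(requests.get("memory", "0")) / (1024 * 1024))

        # Only lower the resource that is over its threshold; never raise the other one
        new_requests = {}
        if cpu_m > CPU_THRESHOLD_MILLICORES:
            new_requests["cpu"] = f"{CPU_THRESHOLD_MILLICORES}m"
        if mem_mi > MEM_THRESHOLD_MI:
            new_requests["memory"] = f"{MEM_THRESHOLD_MI}Mi"
        if not new_requests:
            continue

        print(f"Patching {pod_name}/{cname} requests to {new_requests}")

        # kubectl's default strategic merge patch matches the container by name,
        # so other containers and fields in the pod spec are left alone.
        patch = {"spec": {"containers": [{"name": cname, "resources": {"requests": new_requests}}]}}

        try:
            subprocess.run([
                "kubectl", "patch", "pod", pod_name,
                "-n", NAMESPACE,
                "--subresource", "resize",  # resizes must go through this subresource
                "-p", json.dumps(patch),
            ], check=True)
        except subprocess.CalledProcessError as err:
            # e.g. the resize would change the pod's QoS class, or the pod is gone
            print(f"Resize of {pod_name}/{cname} failed (exit code {err.returncode})")
```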