It’s a quick and dirty way to share data between the node and the container, useful for testing, bootstrapping, or accessing system-level files. But because it breaks the container abstraction and tightly couples your pod to a specific node, it comes with serious tradeoffs.

How it works

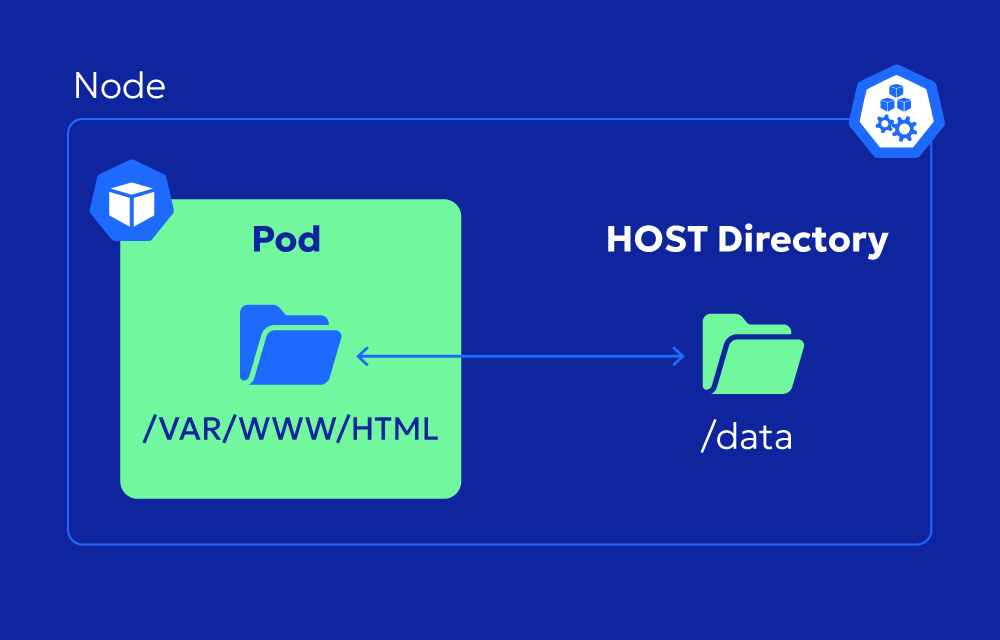

The concept is simple: you specify a path on the host and a mount path inside the container. Kubernetes mounts that host directory into the pod’s container just like a regular volume.

Here’s a quick example:

    volumes:
      - name: config-volume
        hostPath:
          path: /etc/my-config
          type: Directory

    volumeMounts:
      - mountPath: /app/config
        name: config-volume

This tells Kubernetes to mount /etc/my-config from the node into /app/config inside your container.
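To show where those two fragments sit in a complete manifest, here is a minimal Pod sketch. The pod name, container name, image, and command are illustrative assumptions; only the volume name and the two paths come from the example above.

```yaml
# Minimal sketch: pod name, container name, image, and command are
# illustrative assumptions, not part of the original example.
apiVersion: v1
kind: Pod
metadata:
  name: hostpath-demo
spec:
  containers:
    - name: app
      image: busybox:1.36
      command: ["sh", "-c", "ls /app/config && sleep 3600"]
      volumeMounts:
        - name: config-volume      # must match the volume name below
          mountPath: /app/config   # path inside the container
  volumes:
    - name: config-volume
      hostPath:
        path: /etc/my-config       # path on the node
        type: Directory            # the mount fails if this directory is missing
```

Note that volumes is declared at the pod level, while volumeMounts belongs to each container that needs the data.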

You can also define the type of hostPath:

- Directory – must exist, and must be a directory

- File – must exist, and must be a file

- Socket, CharDevice, BlockDevice – for system-level integrations

- DirectoryOrCreate – create the directory if it doesn’t exist

- FileOrCreate – create a blank file if it doesn’t exist

Kubernetes doesn’t do much validation beyond that—you’re expected to know what you’re mounting and why.
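As a rough sketch of how the "OrCreate" types combine, the fragment below pairs a DirectoryOrCreate volume with a FileOrCreate volume. The volume names and the paths under /var/local/demo are hypothetical. The pairing matters because FileOrCreate does not create the file's parent directory.

```yaml
# Fragment of a pod spec; volume names and paths are hypothetical.
volumes:
  - name: state-dir
    hostPath:
      path: /var/local/demo
      type: DirectoryOrCreate   # created as an empty directory if nothing exists there
  - name: state-file
    hostPath:
      path: /var/local/demo/state.txt
      type: FileOrCreate        # created as an empty file; the parent directory must already exist
```

A container would mount both volumes, with the directory volume listed so the parent exists before the file is created.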

Why hostPath is risky in production

On the surface, hostPath sounds useful. You can:

- Mount log directories

- Access host-level binaries

- Share files between pods and the node

But here’s the problem: hostPath ties your pod to a specific node. That undercuts Kubernetes’ scheduling model, in which workloads should be able to run on any node in the cluster.

Once a pod depends on a specific file on a specific node, you lose flexibility:

- You can’t scale replicas across nodes, because each replica sees only its own node’s copy of the path

- Pod rescheduling becomes fragile

- If the node dies, the pod can’t be restarted elsewhere with its data intact

And because the container can access host-level paths, it introduces security concerns, especially if the container runs as root. It’s not hard to imagine someone accidentally mounting /etc or /var/lib/kubelet and causing damage.

When it actually makes sense

Despite the risks, there are cases where using hostPath is justified:

- Local storage testing – for dev clusters or quick testing of volume mounts

- Accessing system-level sockets – like Docker socket (/var/run/docker.sock) in CI agents

- Debugging nodes – when you want to run a privileged pod to poke at node internals

- Custom monitoring agents – that need to read files like /proc or /sys
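For the monitoring-agent case, one common shape is a DaemonSet that mounts /proc and /sys read-only under a separate prefix. This is a sketch under assumptions: the names, labels, image, and the /host mount prefix are all placeholders, and a real agent may need additional settings.

```yaml
# Sketch only: names, labels, image, and mount prefix are placeholders.
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-metrics-agent
spec:
  selector:
    matchLabels:
      app: node-metrics-agent
  template:
    metadata:
      labels:
        app: node-metrics-agent
    spec:
      containers:
        - name: agent
          image: example.com/metrics-agent:latest   # placeholder image
          volumeMounts:
            - name: proc
              mountPath: /host/proc
              readOnly: true     # the agent only reads node state
            - name: sys
              mountPath: /host/sys
              readOnly: true
      volumes:
        - name: proc
          hostPath:
            path: /proc
        - name: sys
          hostPath:
            path: /sys
```

A DaemonSet fits here because the workload is meant to run once per node anyway, so being node-bound is the intent rather than a side effect.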

In these cases, you should:

- Use strict RBAC and an admission policy to control who can use hostPath: Pod Security Admission or OPA/Gatekeeper (PodSecurityPolicy was removed in Kubernetes 1.25)

- Run the pod in a tainted or dedicated node pool

- Mark it clearly as node-bound in your documentation or manifests

- Avoid giving write access unless absolutely necessary
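One way to keep hostPath out of ordinary namespaces, assuming a cluster where the built-in Pod Security Admission controller is enabled, is to enforce the Baseline profile, which forbids hostPath volumes. The namespace name below is hypothetical.

```yaml
# Sketch: the namespace name is hypothetical.
apiVersion: v1
kind: Namespace
metadata:
  name: team-apps
  labels:
    # Reject pods that violate the Baseline profile (hostPath volumes included).
    pod-security.kubernetes.io/enforce: baseline
    # Also surface a warning to the client on violations.
    pod-security.kubernetes.io/warn: baseline
```

Workloads that genuinely need hostPath can then live in a separate, tightly controlled namespace with a more permissive profile.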

You can also pair it with nodeSelector or nodeAffinity to make sure the pod always lands on the right node—but again, that’s a band-aid for something inherently inflexible.
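Putting those recommendations together might look like the sketch below: a pod pinned to a dedicated pool with nodeSelector, tolerating that pool's taint, and mounting the host path read-only. The label key, taint key and value, pod name, and image are assumptions for illustration.

```yaml
# Sketch: label key, taint key/value, pod name, and image are hypothetical.
apiVersion: v1
kind: Pod
metadata:
  name: node-log-reader
spec:
  nodeSelector:
    example.com/pool: hostpath        # only schedule onto nodes carrying this label
  tolerations:
    - key: example.com/dedicated      # tolerate the taint on the dedicated pool
      operator: Equal
      value: hostpath
      effect: NoSchedule
  containers:
    - name: app
      image: busybox:1.36
      command: ["sleep", "3600"]
      volumeMounts:
        - name: host-logs
          mountPath: /host/var/log
          readOnly: true              # no write access to the node's files
  volumes:
    - name: host-logs
      hostPath:
        path: /var/log
        type: Directory
```

The taint keeps unrelated workloads off the pool, while the selector keeps this pod on it; neither one makes the data follow the pod if the node goes away.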

Alternatives to hostPath

If you’re reaching for hostPath in a production setup, ask yourself: is there a better abstraction?

In many cases, the answer is yes:

- emptyDir – for ephemeral storage within the pod’s lifecycle

- PersistentVolumeClaims (PVCs) – for decoupled, schedulable storage

- ConfigMaps and Secrets – for config files, tokens, and small static content

- CSI drivers – for custom storage plugins that expose local or networked storage in a safer way

These options preserve Kubernetes’ portability and resilience, which is kind of the whole point.
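As an illustration, the earlier config-directory mount could be served from a ConfigMap instead of a host path. This is a sketch: the ConfigMap name, its key and contents, the pod name, and the image are assumptions.

```yaml
# Sketch: ConfigMap name, key, contents, pod name, and image are hypothetical.
apiVersion: v1
kind: ConfigMap
metadata:
  name: my-config
data:
  app.properties: |
    log.level=info
---
apiVersion: v1
kind: Pod
metadata:
  name: configmap-demo
spec:
  containers:
    - name: app
      image: busybox:1.36
      command: ["sh", "-c", "cat /app/config/app.properties && sleep 3600"]
      volumeMounts:
        - name: config-volume
          mountPath: /app/config     # same mount path as the hostPath example
  volumes:
    - name: config-volume
      configMap:
        name: my-config              # each key becomes a file under the mount path
```

Because the data lives in the API server rather than on a node, this pod can be scheduled anywhere in the cluster.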

Final thoughts

hostPath is one of those features that gives you a lot of power but very few guardrails. It’s easy to misuse, easy to forget, and hard to scale if you’re not careful.

Use it when you’re debugging, bootstrapping, or building something experimental. But if you’re deploying production workloads, take a step back and consider whether it’s really the best tool for the job.

When you break the abstraction between the container and the host, you lose a lot of what makes Kubernetes powerful in the first place.

Related terms and tools

- PersistentVolume – for storage decoupled from node lifecycle

- ConfigMap – for mounting config files

- CSI (Container Storage Interface) – standard for custom volume drivers

- SecurityContext – restrict pod access to host resources