What Problem Does RollingUpdate Solve?

Imagine you’re running a popular web app with thousands of users. You need to roll out a new version — but you can’t afford even a few seconds of downtime. You also can’t spin up 100% new pods at once, as that could overload your cluster. This is where RollingUpdate comes in.

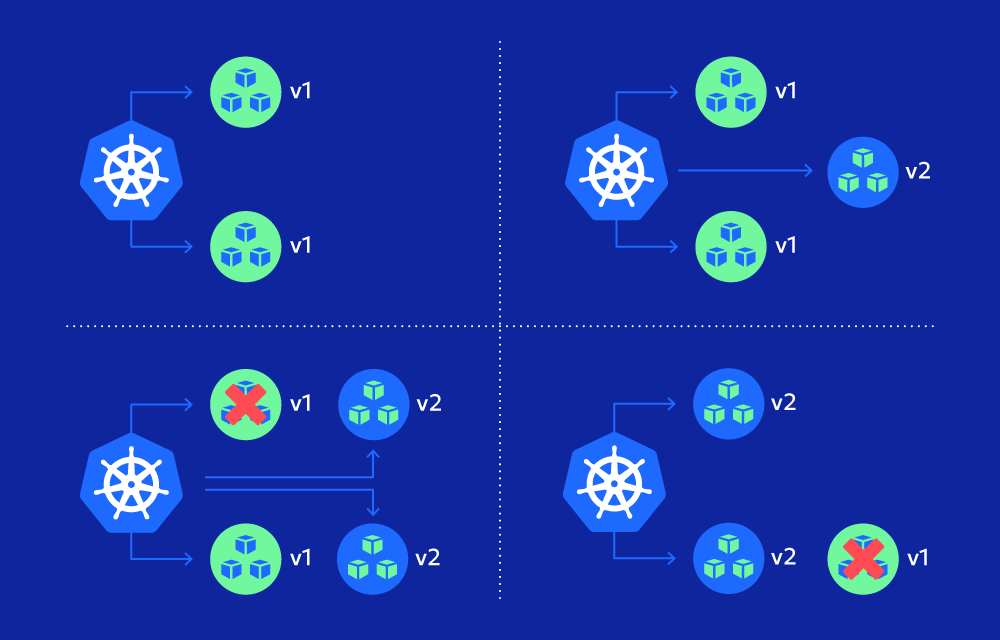

Instead of tearing everything down and starting over, Kubernetes gradually replaces pods, one or a few at a time, monitoring for health and availability after each step.

How It Works

A RollingUpdate strategy allows Kubernetes to:

- Start a new pod with the updated spec

- Wait until it becomes healthy

- Terminate an old pod

- Repeat this process until all pods are updated

This ensures your service stays up during the rollout and minimizes the risk of mass failure.
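The four steps above can be modeled as a small Python loop. This is a toy sketch, not the Deployment controller. Pod, rolling_update, and the is_ready callback are hypothetical names, and the model always replaces exactly one pod at a time.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Pod:
    name: str
    version: str


def rolling_update(
    pods: List[Pod], new_version: str, is_ready: Callable[[Pod], bool]
) -> Tuple[List[Pod], bool]:
    """Toy model: replace pods one at a time, gated by a readiness check.

    Returns the resulting pod list and whether every old pod was replaced.
    """
    running = list(pods)  # copy so the caller's list is left untouched
    for old in pods:
        # 1. Start a new pod with the updated spec.
        new = Pod(name=f"{old.name}-{new_version}", version=new_version)
        running.append(new)
        # 2. Wait until it becomes healthy. If it never does, the rollout
        #    stalls: the unready new pod and all remaining old pods stay put.
        if not is_ready(new):
            return running, False
        # 3. Terminate one old pod.
        running.remove(old)
    # 4. Repeated for every old pod, so all pods now run the new version.
    return running, True
```

With is_ready=lambda pod: True, four "v1" pods end up as four "v2" pods. With is_ready=lambda pod: False, the function returns the four old pods plus one unready new pod: the rollout stops, but the old version keeps serving.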

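Its behavior is controlled by two important parameters, maxUnavailable and maxSurge. Both default to 25% of the desired replica count, and each can be set either as an absolute number of pods or as a percentage.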

Key Settings in RollingUpdate

```yaml
strategy:
  type: RollingUpdate
  rollingUpdate:
    maxUnavailable: 1
    maxSurge: 1
```

- maxUnavailable: The maximum number of pods, counted against the desired replica count, that can be unavailable during the update. A value of 1 means at most one pod below the desired count can be out of service at any time.

- maxSurge: The maximum number of extra pods that can be created above the desired count during the update. A value of 1 allows one more pod than the desired number to exist during the rollout.

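Together, these settings let Kubernetes balance availability and speed: a larger maxSurge speeds up the rollout at the cost of extra temporary capacity, while a larger maxUnavailable speeds it up at the cost of serving capacity.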
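Percentages are converted into concrete pod counts before the rollout starts. The sketch below shows that arithmetic; resolve and rollout_bounds are hypothetical helper names. The rounding rules (maxSurge rounds up, maxUnavailable rounds down) and the fallback when both resolve to zero mirror how the Deployment controller resolves these values, but treat this as an illustration rather than the controller's code.

```python
from typing import Tuple, Union

IntOrPercent = Union[int, str]  # e.g. 1 or "25%"


def resolve(value: IntOrPercent, replicas: int, round_up: bool) -> int:
    """Convert an absolute count or a percentage string into a pod count."""
    if isinstance(value, int):
        return value
    scaled = int(value.rstrip("%")) * replicas
    # Integer ceiling/floor division avoids floating-point rounding surprises.
    return -(-scaled // 100) if round_up else scaled // 100


def rollout_bounds(
    replicas: int,
    max_surge: IntOrPercent = "25%",
    max_unavailable: IntOrPercent = "25%",
) -> Tuple[int, int]:
    """Return (maximum total pods, minimum available pods) during a rollout."""
    surge = resolve(max_surge, replicas, round_up=True)
    unavailable = resolve(max_unavailable, replicas, round_up=False)
    # Both values may not be set to zero explicitly, but rounding down can
    # still produce 0/0; in that case one unavailable pod is allowed so the
    # rollout can make progress.
    if surge == 0 and unavailable == 0:
        unavailable = 1
    return replicas + surge, replicas - unavailable


print(rollout_bounds(4))        # (5, 3): 25% of 4 is exactly 1 either way
print(rollout_bounds(10))       # (13, 8): 2.5 rounds up to 3 and down to 2
print(rollout_bounds(4, 1, 1))  # (5, 3): the explicit settings shown above
```

The asymmetric rounding errs on the side of availability: a fractional surge is allowed to add a whole extra pod, while a fractional unavailability never removes one.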

Example

Let’s say you have a Deployment with 4 replicas and these settings:

```yaml
maxUnavailable: 1
maxSurge: 1
```

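During the update, Kubernetes keeps at most 5 pods in total (4 + maxSurge) and at least 3 pods available (4 - maxUnavailable):

- Kubernetes adds 1 new pod (total 5 pods)

- Because one pod may be unavailable, it can also remove 1 old pod right away, without waiting for the new pod to become ready, and use the freed slot to start a second new pod

- As new pods become ready, it removes more old pods and starts replacements, repeating until all 4 pods are updated

If you want the strict sequence of adding 1 new pod, waiting until it is healthy, and only then removing 1 old pod, set maxUnavailable: 0 and maxSurge: 1.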
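The scaling sequence can be explored with a simplified simulation. simulate is a hypothetical function, and it assumes that old pods are always ready, that every new pod passes its readiness probe one "tick" after it is created, and that maxSurge and maxUnavailable are already absolute numbers. The real controller reconciles asynchronously, so exact interleavings depend on timing, but it enforces the same two bounds.

```python
from typing import List, Tuple


def simulate(replicas: int, max_surge: int, max_unavailable: int) -> List[Tuple[int, int, int]]:
    """Simplified rolling-update model.

    Returns an (old pods, new pods, available pods) snapshot after every
    scaling action. Old pods are always ready; new pods become ready one
    tick after they are created.
    """
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("maxSurge and maxUnavailable cannot both be 0")
    max_total = replicas + max_surge            # upper bound on pods
    min_available = replicas - max_unavailable  # lower bound on ready pods
    old, new_ready, new_starting = replicas, 0, 0
    history = []

    while old > 0:
        changed = True
        while changed:  # keep scaling until neither bound allows a step
            changed = False
            # Scale up: start new pods while the total stays within maxSurge.
            new_total = new_ready + new_starting
            up = min(max_total - (old + new_total), replicas - new_total)
            if up > 0:
                new_starting += up
                history.append((old, new_ready + new_starting, old + new_ready))
                changed = True
            # Scale down: remove old pods while enough pods stay available.
            down = min(old, old + new_ready - min_available)
            if down > 0:
                old -= down
                history.append((old, new_ready + new_starting, old + new_ready))
                changed = True
        # One tick passes: every starting pod passes its readiness probe.
        new_ready += new_starting
        new_starting = 0
    return history
```

In simulate(4, 1, 1), the third snapshot, (3, 2, 3), shows two new pods starting while one old pod is already gone. simulate(4, 1, 0) never drops below 4 available pods, which reproduces the stricter add-one, wait, remove-one sequence.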

Advantages of RollingUpdate

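✅ Zero downtime, as long as readiness probes accurately report when a pod can serve traffic

✅ Incremental rollout (great for catching errors early)

✅ Readiness checks gate each step, so pods that aren’t ready don’t receive traffic

✅ Can be paused, or rolled back to a previous revision, if something goes wrong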

When It Might Fail

RollingUpdate depends heavily on readiness probes and robust application health. If your new pods don’t pass health checks, the rollout can stall or get stuck halfway.

Common failure scenarios:

- Bad image or broken startup logic

- Readiness probe misconfigured

- Network dependency not available yet

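While new pods fail their checks, Kubernetes keeps at least the desired replica count minus maxUnavailable pods available, so your app keeps serving from the old pods, but the rollout halts. After progressDeadlineSeconds (600 seconds by default), the Deployment is marked as having failed to progress. Kubernetes does not roll it back on its own, so reverting is up to you (for example, with kubectl rollout undo).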
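To see whether a rollout has halted, you can inspect the Deployment’s status; kubectl rollout status performs similar checks for you. The sketch below is a simplified Python version that works on the dictionary you get by parsing the output of kubectl get deployment my-app -o json (for example with json.loads). rollout_state is a hypothetical helper, and it ignores edge cases such as paused Deployments.

```python
from typing import Any, Dict


def rollout_state(deployment: Dict[str, Any]) -> str:
    """Classify a rollout from a Deployment object (as JSON from kubectl).

    Returns "waiting", "stalled", "in progress", or "complete".
    """
    meta = deployment.get("metadata", {})
    spec = deployment.get("spec", {})
    status = deployment.get("status", {})

    # The controller has not yet observed the latest spec change.
    if status.get("observedGeneration", 0) < meta.get("generation", 0):
        return "waiting"

    # progressDeadlineSeconds elapsed without progress. Kubernetes only
    # reports this; it does not roll back on its own.
    for cond in status.get("conditions", []):
        if cond.get("type") == "Progressing" and cond.get("reason") == "ProgressDeadlineExceeded":
            return "stalled"

    desired = spec.get("replicas", 1)
    updated = status.get("updatedReplicas", 0)
    if updated < desired:                             # new pods still being created
        return "in progress"
    if status.get("replicas", 0) > updated:           # old pods still terminating
        return "in progress"
    if status.get("availableReplicas", 0) < updated:  # new pods not yet available
        return "in progress"
    return "complete"
```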

Use Cases in Practice

Good Fit:

- Web servers

- Microservices

- APIs where backward compatibility is maintained

Not Ideal:

- Databases or workloads that require strict ordering or state migration

- Systems where multiple versions cannot run side by side

For these, consider Recreate, Blue/Green, or Canary deployments instead.

How to Trigger a RollingUpdate

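Any change to the Deployment’s pod template (spec.template), such as a new container image, environment variable, or pod annotation, starts a new rollout; changing only the replica count does not. For example, to update the image: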

```shell
kubectl set image deployment/my-app my-app=my-image:v2
```

You can monitor it with:

```shell
kubectl rollout status deployment/my-app
```

Or pause/resume as needed:

```shell
kubectl rollout pause deployment/my-app
kubectl rollout resume deployment/my-app
```
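Under the hood, the Deployment controller tells revisions apart by the pod template: each ReplicaSet it creates carries a pod-template-hash label derived from spec.template. The Python sketch below imitates that check with SHA-256 over canonical JSON. The hash function, template_hash, and starts_rollout are assumptions for illustration, not the controller’s actual algorithm. It shows why kubectl set image starts a rollout while changing replicas does not.

```python
import hashlib
import json
from typing import Any, Dict


def template_hash(deployment_spec: Dict[str, Any]) -> str:
    """Fingerprint only the pod template (spec.template) of a Deployment spec.

    Stand-in only: Kubernetes computes its own pod-template-hash differently.
    """
    template = deployment_spec.get("template", {})
    # sort_keys gives a canonical encoding, so key order does not matter.
    canonical = json.dumps(template, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:10]


def starts_rollout(old_spec: Dict[str, Any], new_spec: Dict[str, Any]) -> bool:
    """A new rollout (new ReplicaSet) is needed only if the template changed."""
    return template_hash(old_spec) != template_hash(new_spec)


v1 = {"replicas": 4, "template": {"spec": {"containers": [{"name": "my-app", "image": "my-image:v1"}]}}}
scaled = {**v1, "replicas": 10}  # same template, different replica count
v2 = {**v1, "template": {"spec": {"containers": [{"name": "my-app", "image": "my-image:v2"}]}}}
print(starts_rollout(v1, scaled))  # False: only the replica count changed
print(starts_rollout(v1, v2))      # True: the container image changed
```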

Summary

RollingUpdate is the default Deployment strategy in Kubernetes and a safe choice for updating applications without taking them offline. It offers a balance between speed and reliability, giving you a smooth, controlled rollout backed by readiness checks and built-in rollback support.