Components of Kubernetes Clusters

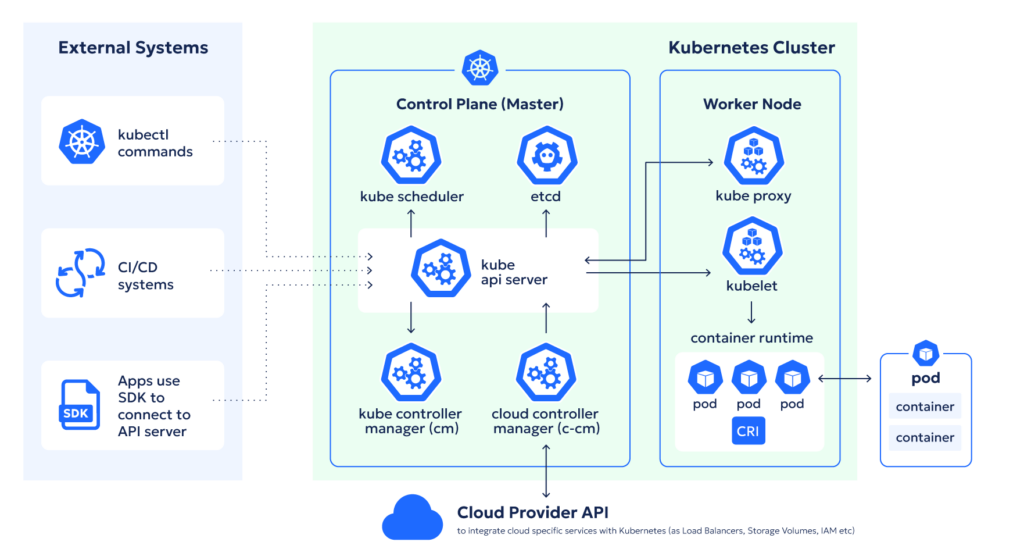

A Kubernetes cluster is composed of several critical components that work together to manage and orchestrate containerized applications across multiple hosts. These components can be broadly categorized into two groups: the Control Plane and the Worker Nodes. Understanding these components is crucial for effectively deploying, scaling, and managing applications in a Kubernetes environment.

1. Control Plane Components

The control plane is responsible for managing the overall state of the Kubernetes cluster. It orchestrates all Kubernetes activities, from scheduling and monitoring workloads to managing the network and scaling applications. The key components of the control plane include:

- API Server: The API server is the central management point of the Kubernetes cluster. It acts as a communication hub between all other components within the cluster, exposing the Kubernetes API. Users interact with the Kubernetes cluster primarily through the API server, which validates and processes REST requests. The API server also serves as the gatekeeper for accessing the cluster’s resources.

- etcd: etcd is a distributed key-value store that holds all the configuration data, state, and metadata for the Kubernetes cluster. This data is essential for maintaining the desired state of the cluster, including information about nodes, pods, secrets, and service discovery. etcd uses the Raft consensus algorithm to provide strongly consistent reads and writes. Only the API server talks to etcd directly; the other components observe changes by watching the API server.

- Controller Manager: The controller manager is a daemon that runs controllers, which are background processes that manage the state of the cluster. For example, the replication controller ensures that the desired number of pod replicas are running at any given time, while the node controller monitors the health of nodes in the cluster. The controller manager constantly reconciles the current state of the cluster with the desired state specified by the user.

- Scheduler: The scheduler is responsible for assigning workloads (pods) to specific nodes in the cluster based on resource availability, policies, and constraints. It evaluates the resource requirements of pods and matches them with the available resources on the nodes, ensuring efficient distribution of workloads and optimal performance.

- Cloud Controller Manager: This component is responsible for managing cloud-specific control logic, such as integrating with the underlying cloud infrastructure (e.g., AWS, Azure, Google Cloud). It allows Kubernetes to interact with the cloud provider’s APIs for tasks like provisioning load balancers, managing storage volumes, and handling node lifecycle events.
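The reconcile-and-schedule behaviour described in this list can be sketched in a few lines. The following is a simplified illustration, not the actual Kubernetes implementation: the `Node` and `Pod` classes, the `reconcile` and `schedule` functions, and the scoring rule (prefer the node with the most CPU left over) are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    free_cpu_millis: int   # unallocated CPU, in millicores (hypothetical field)
    free_memory_mib: int   # unallocated memory, in MiB (hypothetical field)


@dataclass
class Pod:
    name: str
    cpu_millis: int        # requested CPU
    memory_mib: int        # requested memory


def reconcile(desired_replicas: int, running: list) -> dict:
    """One pass of a control loop: compare desired state with observed state."""
    missing = desired_replicas - len(running)
    if missing > 0:
        return {"create": missing, "delete": []}
    # Zero or more surplus pods: everything past the desired count is removed.
    return {"create": 0, "delete": running[desired_replicas:]}


def schedule(pod: Pod, nodes: list) -> Optional[str]:
    """Filter out nodes that cannot fit the pod, then score the rest."""
    feasible = [
        n for n in nodes
        if n.free_cpu_millis >= pod.cpu_millis
        and n.free_memory_mib >= pod.memory_mib
    ]
    if not feasible:
        return None  # no node fits, so the pod would stay Pending
    # Toy scoring rule (an assumption): most CPU remaining after placement.
    best = max(feasible, key=lambda n: n.free_cpu_millis - pod.cpu_millis)
    return best.name
```

A real controller runs `reconcile`-style logic continuously against state read from the API server, and the real scheduler combines many filter and scoring plugins (affinity, taints, topology spread, and so on) rather than a single rule.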

2. Worker Node Components

Worker nodes are the machines that run the containerized applications managed by Kubernetes. Each worker node contains several key components that facilitate the execution and management of these applications:

- Kubelet: The kubelet is an agent that runs on each worker node, ensuring that containers are running in pods as expected. It communicates with the control plane, receives instructions, and enforces the desired state of the node by managing pod lifecycle, monitoring their status, and reporting back to the control plane.

- Kube-proxy: Kube-proxy is a network proxy that runs on each node and implements part of the Kubernetes Service concept. It maintains network rules on the node (for example with iptables or IPVS) so that traffic sent to a Service's virtual IP is forwarded to one of the pods backing that Service, which spreads load across those pods. Pod IP addresses are assigned by the network plugin, not by kube-proxy, and name-based service discovery is provided by the cluster DNS add-on.

- Container Runtime: The container runtime is the software responsible for running the containers on each node. Kubernetes works with any runtime that implements the Container Runtime Interface (CRI); containerd and CRI-O are the most common choices. Docker Engine was long the default, but since the dockershim was removed in Kubernetes 1.24 it requires an external adapter (cri-dockerd). The container runtime pulls the container images from a registry, launches containers, and manages their lifecycle.
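To make the kube-proxy role in this list more concrete, here is a toy model of one Service address fanning out to a changing set of pod endpoints. It is only a sketch: the real kube-proxy programs kernel-level rules rather than forwarding traffic in user code, the `ServiceProxy` class is hypothetical, and round-robin is an assumed policy (the actual selection behaviour depends on the proxy mode).

```python
import itertools


class ServiceProxy:
    """Maps one stable Service address to a changing set of pod endpoints."""

    def __init__(self, endpoints):
        self.set_endpoints(endpoints)

    def set_endpoints(self, endpoints):
        # Called whenever pods behind the Service are added or removed.
        self._endpoints = list(endpoints)
        self._cycle = itertools.cycle(self._endpoints)

    def pick_backend(self):
        # Choose the pod that receives the next connection.
        if not self._endpoints:
            raise RuntimeError("service has no ready endpoints")
        return next(self._cycle)


proxy = ServiceProxy(["10.244.1.5:8080", "10.244.2.7:8080"])
first_three = [proxy.pick_backend() for _ in range(3)]
```

The point of the sketch is the indirection: clients keep using one Service address while the endpoint list behind it is replaced as pods come and go.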

3. Networking and Storage

In addition to the control plane and worker nodes, Kubernetes clusters rely on a robust networking and storage infrastructure:

- Networking: Kubernetes networking is designed to ensure that each pod can communicate with other pods and services within the cluster. The network model is flat, meaning every pod has a unique IP address and can communicate with any other pod without Network Address Translation (NAT). Kubernetes uses various networking plugins (e.g., Calico, Flannel, Cilium) to implement this model.

- Storage: Kubernetes abstracts storage from the underlying infrastructure, allowing persistent storage to be attached to pods as needed. Storage can be local to the node, or it can be external, such as cloud-based storage solutions or network file systems (NFS). Kubernetes manages storage through persistent volumes (PVs) and persistent volume claims (PVCs), enabling data to persist across pod restarts and migrations.
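The relationship between persistent volumes and claims can be illustrated with a small matching function. This is a simplified sketch under stated assumptions: the `PersistentVolume` and `Claim` classes and the `bind` function are hypothetical, and the matching considers only capacity and access modes, whereas real binding also takes storage classes, selectors, and dynamic provisioning into account.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PersistentVolume:
    name: str
    capacity_gib: int
    access_modes: frozenset
    claimed_by: Optional[str] = None  # name of the bound claim, if any


@dataclass
class Claim:
    name: str
    request_gib: int
    access_modes: frozenset


def bind(claim: Claim, volumes: list) -> Optional[str]:
    """Bind the claim to the smallest unbound volume that satisfies it."""
    candidates = [
        v for v in volumes
        if v.claimed_by is None
        and v.capacity_gib >= claim.request_gib
        and claim.access_modes <= v.access_modes
    ]
    if not candidates:
        return None  # the claim would stay Pending
    chosen = min(candidates, key=lambda v: v.capacity_gib)
    chosen.claimed_by = claim.name  # a volume is bound to at most one claim
    return chosen.name
```

Because the pod refers only to the claim, the data outlives any individual pod: a replacement pod that mounts the same claim gets the same volume.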

Kubernetes clusters sub glossary

A

- Admin: A Kubernetes cluster admin is responsible for managing the entire cluster, including setting up, configuring, and maintaining the control plane, managing user access, and ensuring security.

Further Reading: Kubernetes Administration

- Architecture: The architecture of a Kubernetes cluster includes the control plane (API server, etcd, controller manager, and scheduler) and the worker nodes. The control plane manages the cluster, while worker nodes run the containerized applications.

- Autoscaler: The Kubernetes Cluster Autoscaler automatically adjusts the number of nodes in a cluster based on resource needs. It scales up when pods cannot be scheduled because no node has enough free resources, and scales down when nodes are underutilized and their pods can be moved elsewhere.

- Autoscaler vs Karpenter: Karpenter is an open-source Kubernetes node autoscaler designed as an alternative to Cluster Autoscaler. It provides more flexible and efficient scaling options, especially for cloud environments.

- At Home: Setting up a Kubernetes cluster at home typically involves using low-cost hardware or virtual machines to create a small-scale cluster for learning or personal projects.

- Alternatives: Alternatives to Kubernetes for container orchestration include Docker Swarm, Apache Mesos, and Nomad. These platforms offer different features and complexities compared to Kubernetes.
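The scale-up and scale-down rule from the Autoscaler entry above can be written as a small decision function. This is a rough sketch, not the Cluster Autoscaler's actual algorithm: `autoscale_decision` is a hypothetical name, utilization is reduced to a single number per node, and the 0.5 threshold is an assumed value chosen for the example.

```python
def autoscale_decision(pending_pods, node_utilization, scale_down_threshold=0.5):
    """Return (action, node) for one evaluation of the cluster.

    pending_pods: number of pods that could not be scheduled.
    node_utilization: mapping of node name to utilization in [0.0, 1.0].
    """
    if pending_pods > 0:
        # Unschedulable pods take priority: add capacity.
        return ("scale_up", None)
    underused = [
        name for name, used in node_utilization.items()
        if used < scale_down_threshold
    ]
    if underused:
        # Remove the least-utilized node first.
        return ("scale_down", min(underused, key=node_utilization.get))
    return ("no_change", None)
```

A production autoscaler also has to check that the pods on a candidate node can actually be rescheduled elsewhere before removing it, which this sketch leaves out.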

B

- Backup: Backing up a Kubernetes cluster involves creating snapshots of critical components like etcd to ensure the cluster state can be restored in case of failure.

- Backup Tool: Tools like Velero and Kasten K10 are commonly used for backing up and restoring Kubernetes clusters.

- Behind Proxy: Configuring a Kubernetes cluster behind a proxy involves routing cluster traffic through a proxy server for security or regulatory compliance.

- Basics: The basics of a Kubernetes cluster include understanding the core components (like nodes, pods, and services), the architecture, and the fundamental concepts of container orchestration.

- Binding: ClusterRoleBinding in Kubernetes binds a ClusterRole to a user, group, or service account, granting them permissions at the cluster level.

- Bootstrap: Bootstrapping a Kubernetes cluster involves the initial setup and configuration of the cluster components, often using tools like kubeadm.

- Build: Building a Kubernetes cluster involves setting up the required hardware or virtual resources, installing Kubernetes components, and configuring network and storage layers.

- Blue-Green Deployment: A blue-green deployment strategy involves running two identical environments (blue and green) and switching traffic between them to minimize downtime during updates.
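One common way to implement the blue-green switch described above is to change the label selector of a Service so that it points at the other environment. The sketch below models that idea with plain dictionaries; the `select_pods` function, the label keys, and the pod names are all made up for illustration.

```python
def select_pods(selector, pods):
    """Return the names of pods whose labels match every selector entry."""
    return [
        pod["name"] for pod in pods
        if all(pod["labels"].get(key) == value for key, value in selector.items())
    ]


pods = [
    {"name": "web-blue-1", "labels": {"app": "web", "version": "blue"}},
    {"name": "web-blue-2", "labels": {"app": "web", "version": "blue"}},
    {"name": "web-green-1", "labels": {"app": "web", "version": "green"}},
]

# Both environments run side by side; the selector decides who gets traffic.
selector = {"app": "web", "version": "blue"}
before = select_pods(selector, pods)

# Cutover: a single change to the selector moves all traffic to green.
selector["version"] = "green"
after = select_pods(selector, pods)
```

Rolling back is the same operation in reverse, which is why the blue environment is usually kept running until the green one has been verified.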

C

- CIDR: Cluster CIDR is a range of IP addresses assigned to pods in a Kubernetes cluster, defining the IP space used for pod networking.

- Certificate: Kubernetes uses certificates for secure communication between the API server and nodes. Proper management of these certificates is crucial for cluster security.

- CA Certificate: The CA (Certificate Authority) certificate is used to sign other certificates within the Kubernetes cluster, ensuring secure communication.

- Context: In Kubernetes, a context is a set of access parameters used by `kubectl` to interact with a specific cluster, including the API server address, user credentials, and the namespace.

- Cost: The cost of running a Kubernetes cluster varies depending on factors like the number of nodes, cloud provider, managed services, and additional features like monitoring and security.

Further Reading: Kubernetes cost optimization strategies

- Commands: Common Kubernetes commands include `kubectl get`, `kubectl describe`, `kubectl create`, and `kubectl apply`, which are used to manage cluster resources.

- Configuration: Configuring a Kubernetes cluster involves setting up the components, defining network policies, managing storage classes, and configuring security settings.

- Creation Steps: Creating a Kubernetes cluster involves steps like setting up the control plane, joining worker nodes, configuring networking, and deploying necessary services.
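As an illustration of the CIDR entry in this section, the snippet below carves a cluster-wide pod range into one smaller range per node using Python's standard `ipaddress` module. The range `10.244.0.0/16`, the `/24` per-node size, and the `node_pod_ranges` helper are example assumptions, not values taken from any particular cluster.

```python
import ipaddress


def node_pod_ranges(cluster_cidr, node_names, node_prefix=24):
    """Assign each node its own slice of the cluster's pod address space."""
    network = ipaddress.ip_network(cluster_cidr)
    subnets = network.subnets(new_prefix=node_prefix)
    ranges = {name: str(subnet) for name, subnet in zip(node_names, subnets)}
    if len(ranges) < len(node_names):
        raise ValueError("cluster CIDR is too small for this many nodes")
    return ranges


ranges = node_pod_ranges("10.244.0.0/16", ["node-a", "node-b", "node-c"])
# Each /24 slice contains 256 addresses for the pods on that node.
addresses_per_node = ipaddress.ip_network(ranges["node-a"]).num_addresses
```

The arithmetic also shows why the cluster CIDR must be sized up front: a `/16` split into `/24` slices supports at most 256 nodes.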

D

- DNS: Kubernetes has an internal DNS service that automatically assigns DNS names to services, enabling pods to communicate with each other using domain names.

- Dashboard: The Kubernetes Dashboard is a web-based UI for managing and monitoring the cluster, viewing workloads, and managing resources.

- Deployment: Deployment in Kubernetes refers to the process of managing containerized applications across nodes, ensuring the desired state is maintained.

- Deployment Tools: Tools like Helm, Kustomize, and ArgoCD help automate and manage complex deployment processes in Kubernetes clusters.

Further Reading: Kubernetes Deployment Tools

- Diagram: A Kubernetes cluster diagram visually represents the architecture, including components like the control plane, worker nodes, and networking layers.

- DNS Name: The DNS names assigned to services within a Kubernetes cluster allow them to be accessed by other services and pods using human-readable names.

Further Reading: Kubernetes DNS

- Domain: The cluster domain is the DNS domain under which services within the Kubernetes cluster are named; it is typically `cluster.local`.

Further Reading: Kubernetes Domain Name
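The DNS entries in this section can be tied together with a short example of how a service's fully qualified name is built from its name, its namespace, and the cluster domain. The `service_fqdn` helper and the service and namespace names are hypothetical; the `<service>.<namespace>.svc.<cluster-domain>` layout follows the Kubernetes DNS naming convention for services.

```python
def service_fqdn(service, namespace="default", cluster_domain="cluster.local"):
    """Build the in-cluster DNS name for a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


name = service_fqdn("checkout", namespace="shop")
```

Pods in the same namespace can usually use the short name (`checkout`) because the resolver's search path fills in the rest; the full name is needed mainly when crossing namespaces.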

E

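- Endpoint: In Kubernetes, endpoints are the IP addresses and ports of the pods that back a Service; they are tracked in EndpointSlice objects so that traffic for the Service can be routed to healthy pods. The term "cluster endpoint" is also used for the address at which the cluster's API server is reachable.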

Further Reading: Kubernetes Endpoint Slices

Further Reading

If you want to dive deeper into Kubernetes clusters and the associated concepts, you can explore the official documentation and authoritative resources provided by Kubernetes, AWS, and Azure.